Overview of Data Protection Mechanisms for an Enterprise Data Hub

The goal of data protection is to ensure that only authorized users can view, use, or contribute to a data set. These security controls add another layer of protection against potential threats from end users, administrators, and any other potentially malicious actors on the network. Achieving this goal has two parts: protecting data when it is persisted to disk or other storage media, commonly called data-at-rest, and protecting data while it moves from one process or system to another, that is, data-in-transit.

Several common compliance regulations call for data protection in addition to the other security controls discussed in this paper. Cloudera provides security for data in transit, through TLS and other mechanisms - centrally deployed through Cloudera Manager. Transparent data-at-rest protection is provided through the combination of transparent HDFS encryption, Navigator Encrypt, and Navigator Key Trustee.

Protecting Data At-Rest

For data-at-rest, there are several risk avenues that may lead to unauthorized access, such as exposed data on a hard drive after a mechanical failure or data being accessed by previously compromised accounts. Data-at-rest is also complicated by longevity: where and how does one store the keys to decrypt data throughout an arbitrary period of retention? Key management issues, such as mitigating compromised keys and re-encrypting data, also complicate the process.

However, in some cases, you might find that relying on encryption to protect your data is not sufficient. With encryption, sensitive data may still be exposed to an administrator who has complete access to the cluster. Even users with appropriate ACLs on the data could have access to logs and queries where sensitive data might have leaked. To prevent such leaks, Cloudera now allows you to mask personally identifiable information (PII) from log files, audit data and SQL queries.

Encryption Options Available with Hadoop

Hadoop, as a central data hub for many kinds of data within an organization, naturally has different needs for data protection depending on the audience and the processes that use a given data set. CDH provides transparent HDFS encryption, ensuring that all sensitive data is encrypted before being stored on disk. This capability, together with enterprise-grade encryption key management with Navigator Key Trustee, delivers the necessary protection to meet regulatory compliance for most enterprises. HDFS Encryption together with Navigator Encrypt (available with Cloudera Enterprise) provides transparent encryption for Hadoop, for both data and metadata. These solutions automatically encrypt data while the cluster continues to run as usual, with a very low performance impact. They are massively scalable, allowing encryption to happen in parallel against all the data nodes: as the cluster grows, encryption grows with it.

Additionally, this transparent encryption is optimized for the Intel chipset for high performance. Intel chipsets include AES-NI co-processors, which provide special capabilities that make encryption workloads run extremely fast. Not all AES-NI implementations are equal, and Cloudera is able to take advantage of the latest Intel advances for even faster performance. HDFS Encryption and Navigator Encrypt also feature separation of duties, preventing even IT Administrators and root users from accessing data that they are not authorized to see.

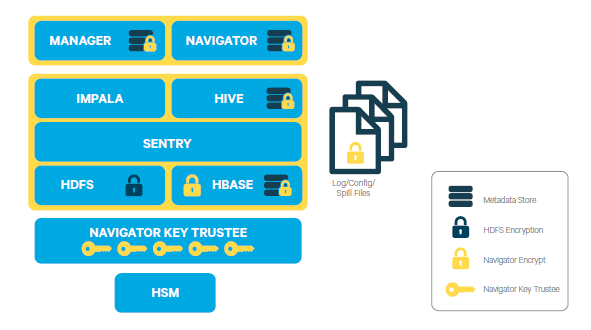

As an example, the figure above shows a sample deployment that uses CDH's transparent HDFS encryption to protect the data stored in HDFS, while Navigator Encrypt is being used to protect all other data on the cluster associated with the Cloudera Manager, Cloudera Navigator, Hive and HBase metadata stores, along with any logs or spills. Navigator Key Trustee is being used to provide robust and fault-tolerant key management.

Encryption can be applied at a number of levels within Hadoop:

- OS Filesystem-level - Encryption can be applied at the Linux operating system filesystem level to cover all files in a volume. An example of this approach is Cloudera Navigator Encrypt (formerly Gazzang zNcrypt) which is available for Cloudera customers licensed for Cloudera Navigator. Navigator Encrypt operates at the Linux volume level, so it can encrypt cluster data inside and outside HDFS, such as temp/spill files, configuration files and metadata databases (to be used only for data related to a CDH cluster). Navigator Encrypt must be used with Cloudera Navigator Key Trustee Server (formerly Gazzang zTrustee).

CDH components, such as Impala, MapReduce, YARN, or HBase, also have the ability to encrypt data that lives temporarily on the local filesystem outside HDFS. To enable this feature, see Configuring Encryption for Data Spills.

- Network-level - Encryption can be applied to encrypt data just before it gets sent across a network and to decrypt it just after receipt. In Hadoop, this means coverage for data sent from client user interfaces as well as service-to-service communication like remote procedure calls (RPCs). This protection uses industry-standard protocols such as TLS/SSL.

Note: Cloudera Manager and CDH components support either TLS 1.0, TLS 1.1, or TLS 1.2, but not SSL 3.0. References to SSL continue only because of its widespread use in technical jargon.

- HDFS-level - Encryption applied by the HDFS client software. HDFS Transparent Encryption operates at the HDFS folder level, allowing you to encrypt some folders and leave others unencrypted. HDFS transparent encryption cannot encrypt any data outside HDFS. To ensure reliable key storage (so that data is not lost), use Cloudera Navigator Key Trustee Server; the default Java keystore can be used for test purposes. For more information, see Enabling HDFS Encryption Using Cloudera Navigator Key Trustee Server.

Unlike OS and network-level encryption, HDFS transparent encryption is end-to-end. That is, it protects data at rest and in transit, which makes it more efficient than implementing a combination of OS-level and network-level encryption.

Data Redaction with Hadoop

Data redaction is the suppression of sensitive data, such as any personally identifiable information (PII). PII can be used on its own or with other information to identify or locate a single person, or to identify an individual in context. Enabling redaction allows you to transform PII to a pattern that does not contain any identifiable information. For example, you could replace all Social Security numbers (SSN) like 123-45-6789 with an unintelligible pattern like XXX-XX-XXXX, or replace only part of the SSN (XXX-XX-6789).
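The SSN example above can be sketched with a regular expression. This is only an illustration of the idea, not the redaction engine that ships with CDH; the function name `redact_ssn` and the `keep_last_four` flag are hypothetical.

```python
import re

# Matches SSNs written as three, two, and four digits separated by hyphens.
SSN_PATTERN = re.compile(r"\b(\d{3})-(\d{2})-(\d{4})\b")


def redact_ssn(text: str, keep_last_four: bool = False) -> str:
    """Replace every SSN found in text with a masked pattern."""
    if keep_last_four:
        # Keep only the last group of digits, as in XXX-XX-6789.
        return SSN_PATTERN.sub(r"XXX-XX-\3", text)
    return SSN_PATTERN.sub("XXX-XX-XXXX", text)


query = "SELECT name FROM customers WHERE ssn = '123-45-6789'"
print(redact_ssn(query))
print(redact_ssn(query, keep_last_four=True))
```

Applying the function to a query string before it is logged masks the identifier while leaving the rest of the statement readable.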

Although encryption techniques are available to protect Hadoop data, the underlying problem with using encryption is that an admin who has complete access to the cluster also has access to unencrypted sensitive user data. Even users with appropriate ACLs on the data could have access to logs and queries where sensitive data might have leaked.

Data redaction provides compliance with industry regulations such as PCI and HIPAA, which require that access to PII be restricted to only those users whose jobs require such access. PII or other sensitive data must not be available through any other channels to users like cluster administrators or data analysts. However, if you already have permissions to access PII through queries, the query results will not be redacted. Redaction only applies to any incidental leak of data. Queries and query results must not show up in cleartext in logs, configuration files, UIs, or other unprotected areas.

Scope:

Data redaction in CDH targets sensitive SQL data and log files. Currently, you can enable or disable redaction for the whole cluster with a simple HDFS service-wide configuration change. Redaction is implemented with the assumption that sensitive information resides in the data itself, not the metadata. If you enable redaction for a file, only sensitive data inside the file is redacted. Metadata such as the name of the file or file owner is not redacted.

When data redaction is enabled, the following data is redacted:

- Logs in HDFS and any dependent cluster services. Log redaction is not available in Isilon-based clusters.

- Audit data sent to Cloudera Navigator

- SQL query strings displayed by Hue, Hive, and Impala.

For more information on enabling this feature, see Sensitive Data Redaction.

Password Redaction

Starting with Cloudera Manager and CDH 5.5, passwords will no longer be accessible in cleartext through the Cloudera Manager Admin Console or in the configuration files stored on disk. For components that use core Hadoop such as HDFS, HBase, and Hive, Cloudera Manager Server uses Hadoop's CredentialProvider interface to encrypt and store passwords inside a secure creds.jceks keystore file. For components that do not use core Hadoop, such as Hue and Impala, instead of the password, Cloudera Manager Server uses a password_script = /path/to/script/that/will/emit/password.sh parameter that, when run, writes the password to stdout. Passwords contained within Cloudera Manager and Cloudera Navigator properties have been redacted internally in Cloudera Manager.

However, the database password contained in Cloudera Manager Server's /etc/cloudera-scm-server/db.properties file has not been redacted. The db.properties file is managed by customers and is populated manually when the Cloudera Manager Server database is being set up for the first time. Since this occurs before the Cloudera Manager Server has even started, encrypting the contents of this file is a completely different challenge as compared to that of redacting configuration files.
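To illustrate the password_script mechanism described above, the following sketch shows how a consumer might obtain a password by running a script and reading its stdout. It is an assumption about the general pattern, not the code Hue or Impala actually uses; `read_password` and the stand-in script are hypothetical.

```python
import subprocess
import sys
from typing import Sequence


def read_password(command: Sequence[str], timeout: float = 10.0) -> str:
    """Run a password script and return what it writes to stdout."""
    result = subprocess.run(
        command,
        capture_output=True,
        text=True,
        check=True,        # raise CalledProcessError on a non-zero exit code
        timeout=timeout,
    )
    # Scripts usually end their output with a newline; strip only that.
    return result.stdout.rstrip("\r\n")


# Stand-in for a real script: a one-line Python program that prints a secret.
demo_script = [sys.executable, "-c", "print('example-password')"]
password = read_password(demo_script)
```

With this pattern the password never appears in the configuration file itself; only the path to the script does.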

- In the Cloudera Manager Admin Console, on the Processes page for a given role instance, passwords in the linked configuration files have been replaced by *******.

- On the Cloudera Manager Server and Agent hosts, all configuration files in the /var/run/cloudera-scm-agent/process directory will have their passwords replaced by *******.

- In the Cloudera Manager Admin Console, Advanced Configuration Snippet parameters will be redacted to block sensitive information such as passwords or secret keys. Users who have the permission to edit the parameter will still see the sensitive words, but read-only users without edit privileges will only see the redacted version.

Redaction of Advanced Configuration Snippet parameters is based on detecting keywords explicitly defined as sensitive in the contents of these parameters. That is, parameters containing the keywords password, key, aws, or secret, will be redacted for users who do not have the required edit privileges. Default values for sensitive fields are not redacted since defaults are published in the public documentation. Default passwords pose a security risk and should not be used in production.

A limitation of this feature is that the list of keywords used to determine sensitive information is currently limited to those listed above and is not configurable using the Cloudera Manager Admin Console.
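The keyword-based detection described above can be sketched as follows. This is a simplified illustration, not Cloudera Manager's implementation: the `name=value` snippet format, the case-insensitive match, and the function names are assumptions made for the example.

```python
# Keywords listed in the text above as marking a parameter as sensitive.
SENSITIVE_KEYWORDS = ("password", "key", "aws", "secret")
MASK = "*******"


def is_sensitive(name: str) -> bool:
    """Return True if the parameter name contains a sensitive keyword."""
    lowered = name.lower()  # case-insensitive matching is an assumption
    return any(keyword in lowered for keyword in SENSITIVE_KEYWORDS)


def redact_snippet(snippet: str, can_edit: bool) -> str:
    """Mask sensitive values unless the viewer has edit privileges."""
    if can_edit:
        return snippet
    redacted_lines = []
    for line in snippet.splitlines():
        name, separator, _value = line.partition("=")
        if separator and is_sensitive(name):
            redacted_lines.append(f"{name}={MASK}")
        else:
            redacted_lines.append(line)
    return "\n".join(redacted_lines)


snippet = "db.password=hunter2\nlog.level=INFO"
print(redact_snippet(snippet, can_edit=False))
```

Because matching is purely keyword-based, a sensitive value stored under a name that contains none of the keywords would pass through unmasked, which is the limitation noted above.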

Protecting Data In-Transit

- HDFS Transparent Encryption: Data encrypted using HDFS Transparent Encryption is protected end-to-end. Any data written to or read from HDFS can only be encrypted or decrypted by the client. HDFS does not have access to the unencrypted data or the encryption keys. This supports both at-rest and in-transit encryption.

- Data Transfer: The first channel is data transfer, including the reading and writing of data blocks to HDFS. Hadoop uses a SASL-enabled wrapper around its native direct TCP/IP-based transport, called DataTransferProtocol, to secure the I/O streams within a DIGEST-MD5 envelope (for steps, see Configuring Encrypted HDFS Data Transport). This procedure also employs secured HadoopRPC (see Remote Procedure Calls) for the key exchange. The HttpFS REST interface, however, does not provide secure communication between the client and HDFS, only secured authentication using SPNEGO.

For the transfer of data between DataNodes during the shuffle phase of a MapReduce job (that is, moving intermediate results between the Map and Reduce portions of the job), Hadoop secures the communication channel with HTTP Secure (HTTPS) using Transport Layer Security (TLS). See Encrypted Shuffle and Encrypted Web UIs.

- Remote Procedure Calls: The second channel is system calls to remote procedures (RPC) to the various systems and frameworks within a Hadoop cluster. Like data transfer activities, Hadoop has its own native protocol for RPC, called HadoopRPC, which is used for Hadoop API client communication, intra-Hadoop services communication, as well as monitoring, heartbeats, and other non-data, non-user activity. HadoopRPC is SASL-enabled for secured transport and defaults to Kerberos and DIGEST-MD5 depending on the type of communication and security settings. For steps, see Configuring Encrypted HDFS Data Transport.

- User Interfaces: The third channel includes the various web-based user interfaces within a Hadoop cluster. For secured transport, the solution is straightforward; these interfaces employ HTTPS.

SSL/TLS Certificates Overview

There are several different certificate strategies that you can employ on your cluster to allow SSL clients to securely connect to servers using trusted certificates or certificates issued by trusted authorities. The set of certificates required depends upon the certificate provisioning strategy you implement. The following strategies, among others, are possible:

- Certificate per host: In this strategy, you obtain one certificate for each host on which at least one SSL daemon role is running. All services on a given host will share this single certificate.

- Certificate for multiple hosts: Using the SubjectAltName extension, it is possible to obtain a certificate that is bound to a list of specific DNS names. One such certificate could be used to protect all hosts in the cluster, or some subset of the cluster hosts. The advantage of this approach over a wildcard certificate is that it allows you to limit the scope of the certificate to a specific set of hosts. The disadvantage is that it requires you to update and redeploy the certificate whenever a host is added to or removed from the cluster.

- Wildcard certificate: You may also choose to obtain a single wildcard certificate to be shared by all services on all hosts in the cluster. This strategy requires that all hosts belong to the same domain. For example, if the hosts in the cluster have DNS names node1.example.com ... node100.example.com, you can obtain a certificate for *.example.com. Note that only one level of wildcarding is allowed; a certificate bound to *.example.com will not work for a daemon running on node1.subdomain.example.com.
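The one-level wildcard rule can be sketched as a label-by-label comparison. This is a deliberately simplified check for illustration; real TLS libraries apply the fuller hostname matching rules of RFC 6125, and the function name `wildcard_matches` is hypothetical.

```python
def wildcard_matches(pattern: str, hostname: str) -> bool:
    """Return True if hostname is covered by a certificate name such as *.example.com."""
    pattern_labels = pattern.lower().split(".")
    host_labels = hostname.lower().split(".")
    # A wildcard stands for exactly one label, so the label counts must agree.
    if len(pattern_labels) != len(host_labels):
        return False
    if pattern_labels[0] == "*":
        # The wildcard must cover a non-empty leftmost label.
        return bool(host_labels[0]) and pattern_labels[1:] == host_labels[1:]
    return pattern_labels == host_labels


print(wildcard_matches("*.example.com", "node1.example.com"))
print(wildcard_matches("*.example.com", "node1.subdomain.example.com"))
```

The first call matches because `node1` fills the single wildcard label; the second does not because the hostname has one label more than the certificate name.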

Note: Wildcard domain certificates and certificates using the SubjectAlternativeName extension are not supported at this time.

For more information on setting up SSL/TLS certificates, continue reading the topics at TLS/SSL Certificates Overview.

TLS Encryption Levels for Cloudera Manager

Transport Layer Security (TLS) provides encryption and authentication in communication between the Cloudera Manager Server and Agents. Encryption prevents snooping, and authentication helps prevent problems caused by malicious servers or agents.

- Level 1 (Good) - This level encrypts communication between the browser and Cloudera Manager, and between Agents and the Cloudera Manager Server. See Configuring TLS Encryption Only for Cloudera Manager followed by Level 1: Configuring TLS Encryption for Cloudera Manager Agents for instructions. Level 1 encryption prevents snooping of the commands and control traffic exchanged between Agents and Cloudera Manager.

- Level 2 (Better) - This level encrypts communication between the Agents and the Server, and provides strong verification of the Cloudera Manager Server certificate by Agents. See Level 2: Configuring TLS Verification of Cloudera Manager Server by the Agents. Level 2 provides Agents with additional security by verifying trust for the certificate presented by the Cloudera Manager Server.

- Level 3 (Best) - This includes encrypted communication between the Agents and the Server, strong verification of the Cloudera Manager Server certificate by the Agents, and authentication of Agents to the Cloudera Manager Server using self-signed or CA-signed certs. See Level 3: Configuring TLS Authentication of Agents to the Cloudera Manager Server. Level 3 TLS prevents cluster Servers from being spoofed by untrusted Agents running on a host. Cloudera recommends that you configure Level 3 TLS encryption for untrusted network environments before enabling Kerberos authentication. This provides secure communication of keytabs between the Cloudera Manager Server and verified Agents across the cluster.
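The difference between the three levels can be illustrated with Python's standard `ssl` module, viewed from the client (Agent) side of a connection. This is an analogy under stated assumptions, not how Cloudera Manager Agents are configured; the function `agent_tls_context` and its parameters are hypothetical.

```python
import ssl
from typing import Optional


def agent_tls_context(level: int,
                      ca_file: Optional[str] = None,
                      cert_file: Optional[str] = None,
                      key_file: Optional[str] = None) -> ssl.SSLContext:
    """Build a client-side TLS context that mirrors the three levels above."""
    if level not in (1, 2, 3):
        raise ValueError("level must be 1, 2, or 3")
    if level == 3 and (cert_file is None or key_file is None):
        raise ValueError("level 3 requires a client certificate and key")

    context = ssl.SSLContext(ssl.PROTOCOL_TLS_CLIENT)
    if level == 1:
        # Level 1: encryption only, the server certificate is not verified.
        context.check_hostname = False  # must be disabled before CERT_NONE
        context.verify_mode = ssl.CERT_NONE
        return context

    # Levels 2 and 3: the client verifies the server certificate.
    # PROTOCOL_TLS_CLIENT already defaults to CERT_REQUIRED with hostname checks.
    if ca_file is not None:
        context.load_verify_locations(cafile=ca_file)
    else:
        context.load_default_certs()

    if level == 3:
        # Level 3: the client also presents its own certificate to the server.
        context.load_cert_chain(certfile=cert_file, keyfile=key_file)
    return context


encrypt_only = agent_tls_context(1)
verify_server = agent_tls_context(2)
```

Each level adds one check to the previous one: encryption alone, then verification of the server, then authentication of the client to the server.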

TLS/SSL Encryption for CDH Components

Cloudera recommends securing a cluster using Kerberos authentication before enabling encryption such as SSL on a cluster. If you enable SSL for a cluster that does not already have Kerberos authentication configured, a warning will be displayed.

- HDFS, MapReduce, and YARN daemons act as both SSL servers and clients.

- HBase daemons act as SSL servers only.

- Oozie daemons act as SSL servers only.

- Hue acts as an SSL client to all of the above.

For information on setting up SSL/TLS for CDH services, see Configuring TLS/SSL Encryption for CDH Services.

Data Protection within Hadoop Projects

The table below lists the various encryption capabilities that can be leveraged by CDH components and Cloudera Manager.

| Project | Encryption for Data-in-Transit | Encryption for Data-at-Rest (HDFS Encryption + Navigator Encrypt + Navigator Key Trustee) |
|---|---|---|
| HDFS | SASL (RPC), SASL (DataTransferProtocol) | Yes |
| MapReduce | SASL (RPC), HTTPS (encrypted shuffle) | Yes |
| YARN | SASL (RPC) | Yes |
| Accumulo | Partial - Only for RPCs and Web UI (Not directly configurable in Cloudera Manager) | Yes |
| Flume | TLS (Avro RPC) | Yes |
| HBase | SASL - For web interfaces, inter-component replication, the HBase shell and the REST, Thrift 1 and Thrift 2 interfaces | Yes |
| HiveServer2 | SASL (Thrift), SASL (JDBC), TLS (JDBC, ODBC) | Yes |
| Hue | TLS | Yes |
| Impala | TLS or SASL between impalad and clients, but not between daemons | |
| Oozie | TLS | Yes |
| Pig | N/A | Yes |
| Search | TLS | Yes |
| Sentry | SASL (RPC) | Yes |
| Spark | None | Yes |
| Sqoop | Partial - Depends on the RDBMS database driver in use | Yes |
| Sqoop2 | Partial - You can encrypt the JDBC connection depending on the RDBMS database driver | Yes |
| ZooKeeper | SASL (RPC) | No |
| Cloudera Manager | TLS - Does not include monitoring | Yes |
| Cloudera Navigator | TLS - Also see Cloudera Manager | Yes |
| Backup and Disaster Recovery | TLS - Also see Cloudera Manager | Yes |

©2016 Cloudera, Inc. All rights reserved