Overview of Authorization Mechanisms for an Enterprise Data Hub

Authorization is concerned with who or what has access to or control over a given resource or service. Within Hadoop, there are many resources and services, ranging from computing frameworks to client applications and, of course, an unbounded amount and variety of data, given the flexibility and scalability of the underlying HDFS storage system. The authorization risks facing enterprises stem directly from this breadth and richness of storage and computing. Many audiences can and want to use, contribute to, and view the data within Hadoop, and this trend will accelerate within organizations as Hadoop becomes critical to the data management infrastructure. Initially, an IT organization might employ a Hadoop cluster for ETL processing, with only a handful of developers within the organization requesting access to the data and services. Yet as other teams, like line-of-business analysts, recognize the utility of Hadoop and its data, they may demand new computing capabilities, like interactive SQL or search, and request the addition of business-sensitive data to Hadoop. Because Hadoop, as an enterprise data hub that stores and works on all data within an organization, merges the capabilities of multiple varied and previously separate IT systems, it requires multiple authorization controls with varying granularities. In such cases, Hadoop management tools simplify setup and maintenance by:

- Tying all users to groups, which can be specified in existing LDAP or AD directories.

- Providing role-based access control for similar interaction methods, like batch and interactive SQL queries. For example, Apache Sentry permissions apply to Hive (HiveServer2) and Impala.


Authorization Mechanisms in Hadoop

- POSIX-style permissions on files and directories

- Access Control Lists (ACL) for management of services and resources

- Role-Based Access Control (RBAC) for certain services with advanced access controls to data.

Accordingly, CDH currently provides the following forms of access control:

- Traditional POSIX-style permissions for directories and files, where each directory and file is assigned a single owner and group. Each assignment has a basic set of permissions available; file permissions are simply read, write, and execute, and directories have an additional permission to determine access to child directories.

- Extended Access Control Lists (ACLs) for HDFS that provide fine-grained control of permissions for HDFS files by allowing you to set different permissions for specific named users or named groups.

- Apache HBase uses ACLs to authorize various operations (READ, WRITE, CREATE, ADMIN) by column, column family, and column family qualifier. HBase ACLs are granted and revoked to both users and groups.

- Role-based access control with Apache Sentry. As of Cloudera Manager 5.1.x, Sentry permissions can be configured using either policy files or the database-backed Sentry service.

- The Sentry service is the preferred way to set up Sentry permissions. See The Sentry Service for more information.

- For the policy file approach to configuring Sentry, see Sentry Policy File Authorization.

Important: Cloudera does not support Apache Ranger or Hive's native authorization frameworks for configuring access control in Hive. Use the Cloudera-supported Apache Sentry instead.



POSIX Permissions

The majority of services within the Hadoop ecosystem, from client applications like the CLI shell to tools written to use the Hadoop API, directly access data stored within HDFS. HDFS uses POSIX-style permissions for directories and files; each directory and file is assigned a single owner and group. Each assignment has a basic set of permissions available; file permissions are simply read, write, and execute, and directories have an additional permission to determine access to child directories.

Ownership and group membership for a given HDFS asset determine a user’s privileges. If a given user fails either of these criteria, they are denied access. For services that may attempt to access more than one file, such as MapReduce, Cloudera Search, and others, data access is determined separately for each file access attempt. File permissions in HDFS are managed by the NameNode.
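The owner, group, and mode check described above can be sketched in a few lines of Python. This is a simplified model of POSIX-style evaluation, not HDFS code: the `FileStatus` class, the `is_allowed` function, and the sample users are all hypothetical names chosen for illustration, and the sketch ignores superusers and extended ACLs.

```python
from dataclasses import dataclass

READ, WRITE, EXECUTE = 4, 2, 1  # standard POSIX permission bits


@dataclass(frozen=True)
class FileStatus:
    """Hypothetical stand-in for the metadata kept for one file."""
    owner: str
    group: str
    mode: int  # octal mode such as 0o640


def is_allowed(user, user_groups, status, wanted):
    """Return True if `user` holds every permission bit in `wanted`.

    Exactly one permission class applies: owner, then group, then other.
    """
    if user == status.owner:
        bits = (status.mode >> 6) & 0o7
    elif status.group in user_groups:
        bits = (status.mode >> 3) & 0o7
    else:
        bits = status.mode & 0o7
    return (bits & wanted) == wanted


# Assumed example: a file owned by alice, readable by her group only.
sales = FileStatus(owner="alice", group="finance-department", mode=0o640)
print(is_allowed("alice", set(), sales, READ | WRITE))
print(is_allowed("bob", {"finance-department"}, sales, WRITE))
```

With mode `0o640`, the owner can read and write, group members can only read, and everyone else is denied.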

Access Control Lists

Hadoop also maintains general access controls for the services themselves in addition to the data within each service and in HDFS. Service access control lists (ACL) are typically defined within the global hadoop-policy.xml file and range from NameNode access to client-to-DataNode communication. In the context of MapReduce and YARN, user and group identifiers form the basis for determining permission for job submission or modification.
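Service ACL values in hadoop-policy.xml are commonly written as a comma-separated list of users, a space, and a comma-separated list of groups, with `*` meaning everyone. The following Python sketch shows how such a value could be evaluated. It is an illustration only, not Hadoop's implementation, and the function names and sample ACL strings are assumptions.

```python
def parse_acl(value):
    """Split an ACL string into (users, groups); None means 'everyone'."""
    if value.strip() == "*":
        return None
    # Text before the first space lists users, text after it lists groups.
    users_part, _, groups_part = value.partition(" ")
    users = {u for u in users_part.split(",") if u}
    groups = {g for g in groups_part.strip().split(",") if g}
    return users, groups


def is_permitted(value, user, user_groups):
    """Check one user (with their groups) against an ACL string."""
    parsed = parse_acl(value)
    if parsed is None:
        return True
    users, groups = parsed
    return user in users or bool(groups & set(user_groups))


# Assumed sample values: two named users plus one group.
acl = "alice,bob analysts"
print(is_permitted(acl, "carol", {"analysts"}))
print(is_permitted(acl, "dave", {"interns"}))
```

A value that starts with a space, such as ` hadoop-admins`, names groups only.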

In addition, with MapReduce and YARN, jobs can be submitted using queues controlled by a scheduler, which is one of the components comprising the resource management capabilities within the cluster. Administrators define permissions to individual queues using ACLs. ACLs can also be defined on a job-by-job basis. As with HDFS permissions, local user accounts and groups must exist on each executing server; otherwise, the queues will be unusable except by superuser accounts.

Apache HBase also uses ACLs for data-level authorization. HBase ACLs authorize various operations (READ, WRITE, CREATE, ADMIN) by column, column family, and column family qualifier. HBase ACLs are granted and revoked to both users and groups. Local user accounts are required for proper authorization, similar to HDFS permissions.

Apache ZooKeeper also maintains ACLs on the information stored within the znodes of a ZooKeeper data tree.

Role-Based Access Control with Apache Sentry

For finer-grained access to data accessible through a schema -- that is, data structures described by the Apache Hive Metastore and used by computing engines like Hive and Impala, as well as collections and indices within Cloudera Search -- CDH supports Apache Sentry, which offers a role-based privilege model for this data and its given schema. Apache Sentry is a granular, role-based authorization module for Hadoop. Sentry provides the ability to control and enforce precise levels of privileges on data for authenticated users and applications on a Hadoop cluster. Sentry currently works out of the box with Apache Hive, Hive Metastore/HCatalog, Apache Solr, Cloudera Impala, and HDFS (limited to Hive table data).

Sentry is designed to be a pluggable authorization engine for Hadoop components. It allows you to define authorization rules to validate a user or application’s access requests for Hadoop resources. Sentry is highly modular and can support authorization for a wide variety of data models in Hadoop.

Architecture Overview

Sentry Components

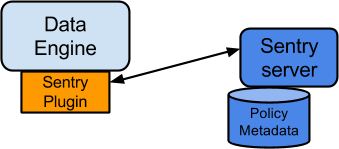

- Sentry Server

The Sentry RPC server manages the authorization metadata. It supports interfaces to securely retrieve and manipulate the metadata.

- Data Engine

This is a data processing application such as Hive or Impala that needs to authorize access to data or metadata resources. The data engine loads the Sentry plugin and all client requests for accessing resources are intercepted and routed to the Sentry plugin for validation.

- Sentry Plugin

The Sentry plugin runs in the data engine. It offers interfaces to manipulate authorization metadata stored in the Sentry Server, and includes the authorization policy engine that evaluates access requests using the authorization metadata retrieved from the server.
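The division of labor among the three components can be illustrated with a small Python model. This is a conceptual sketch only: the class and method names are hypothetical and do not correspond to Sentry's actual interfaces. It shows that the server only holds metadata, while the plugin running inside the data engine makes the decision.

```python
class SentryServer:
    """Stands in for the RPC server: stores and returns authorization metadata."""

    def __init__(self):
        self._privileges = {}  # group name -> set of (action, object) pairs

    def grant(self, group, action, obj):
        self._privileges.setdefault(group, set()).add((action, obj))

    def privileges_for(self, groups):
        result = set()
        for group in groups:
            result |= self._privileges.get(group, set())
        return result


class SentryPlugin:
    """Runs inside the data engine and evaluates each access request."""

    def __init__(self, server):
        self._server = server

    def authorize(self, groups, action, obj):
        # Policy decision made locally from metadata retrieved from the server.
        return (action, obj) in self._server.privileges_for(groups)


class DataEngine:
    """Stands in for Hive or Impala: routes every request through the plugin."""

    def __init__(self, plugin):
        self._plugin = plugin

    def execute(self, groups, action, obj):
        if not self._plugin.authorize(groups, action, obj):
            raise PermissionError(f"{action} on {obj} denied")
        return f"{action} on {obj} allowed"


server = SentryServer()
server.grant("finance-department", "SELECT", "sales")
engine = DataEngine(SentryPlugin(server))
print(engine.execute({"finance-department"}, "SELECT", "sales"))
```

A request for an action that has not been granted, such as INSERT on the same table, raises `PermissionError` in this model.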

Key Concepts

- Authentication - Verifying credentials to reliably identify a user

- Authorization - Limiting the user’s access to a given resource

- User - Individual identified by underlying authentication system

- Group - A set of users, maintained by the authentication system

- Privilege - An instruction or rule that allows access to an object

- Role - A set of privileges; a template to combine multiple access rules

- Authorization models - Defines the objects to be subject to authorization rules and the granularity of actions allowed. For example, in the SQL model, the objects can be databases or tables, and the actions are SELECT, INSERT, and CREATE. For the Search model, the objects are indexes, collections and documents; the access modes are query and update.

User Identity and Group Mapping

Sentry relies on underlying authentication systems such as Kerberos or LDAP to identify the user. It also uses the group mapping mechanism configured in Hadoop to ensure that Sentry sees the same group mapping as other components of the Hadoop ecosystem.

Consider users Alice and Bob who belong to an Active Directory (AD) group called finance-department. Bob also belongs to a group called finance-managers. In Sentry, you first create roles and then grant privileges to these roles. For example, you can create a role called Analyst and grant SELECT on tables Customer and Sales to this role.

The next step is to join these authentication entities (users and groups) to authorization entities (roles). This can be done by granting the Analyst role to the finance-department group. Now Bob and Alice, who are members of the finance-department group, get the SELECT privilege on the Customer and Sales tables.
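The chain from user to group to role to privilege can be modeled with three lookup tables. The Python sketch below reuses the names from the example above; the dictionaries and helper functions are hypothetical and only illustrate how the mapping resolves, not how Sentry stores it.

```python
# Privileges are granted to roles.
role_privileges = {
    "Analyst": {("SELECT", "Customer"), ("SELECT", "Sales")},
}

# Roles are granted to groups.
group_roles = {
    "finance-department": {"Analyst"},
}

# Group membership comes from the directory (AD in the example).
user_groups = {
    "Alice": {"finance-department"},
    "Bob": {"finance-department", "finance-managers"},
}


def privileges_for(user):
    """Collect every privilege reachable through the user's groups and roles."""
    privileges = set()
    for group in user_groups.get(user, set()):
        for role in group_roles.get(group, set()):
            privileges |= role_privileges.get(role, set())
    return privileges


def can(user, action, table):
    return (action, table) in privileges_for(user)


print(can("Alice", "SELECT", "Sales"))
print(can("Bob", "INSERT", "Sales"))
```

Because privileges attach to roles and roles attach to groups, no entry in this model refers to an individual user except the group membership table.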

Role-based access control (RBAC) is a powerful mechanism for managing authorization for a large set of users and data objects in a typical enterprise. Data objects are added and removed, and users join, move within, or leave organizations all the time. RBAC makes managing this much easier. Extending the previous example, if Carol joins the Finance Department, all you need to do is add her to the finance-department group in AD. This will give Carol access to data from the Sales and Customer tables.

Unified Authorization

Another important aspect of Sentry is unified authorization. Access control rules, once defined, work across multiple data access tools. For example, being granted the Analyst role in the previous example allows Bob, Alice, and others in the finance-department group to access table data from SQL engines such as Hive and Impala, from MapReduce and Pig applications, and through metadata access using HCatalog.

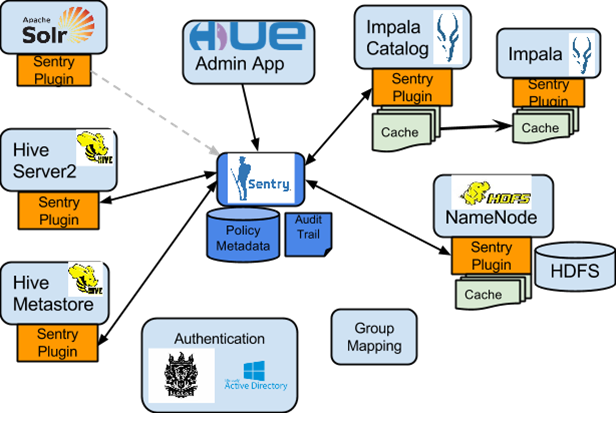

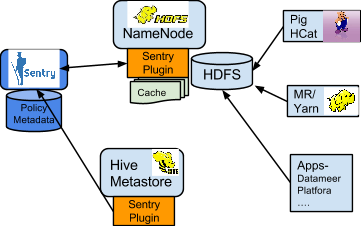


Sentry Integration with the Hadoop Ecosystem

As illustrated above, Apache Sentry works with multiple Hadoop components. At the heart you have the Sentry Server which stores authorization metadata and provides APIs for tools to retrieve and modify this metadata securely.

Note that the Sentry Server only facilitates the metadata. The actual authorization decision is made by a policy engine which runs in data processing applications such as Hive or Impala. Each component loads the Sentry plugin which includes the service client for dealing with the Sentry service and the policy engine to validate the authorization request.

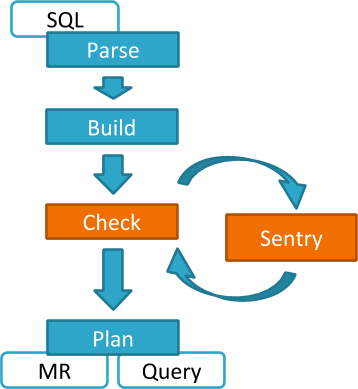

Hive and Sentry

Consider an example in which Bob submits the following Hive query:

select * from production.sales

Hive will identify that user Bob is requesting SELECT access to the Sales table. At this point Hive will ask the Sentry plugin to validate Bob’s access request. The plugin will retrieve Bob’s privileges related to the Sales table and the policy engine will determine if the request is valid.

Hive works with both the Sentry service and policy files. Cloudera recommends that you use the Sentry service, which makes it easier to manage user privileges. For more details and instructions, see The Sentry Service or Sentry Policy File Authorization.

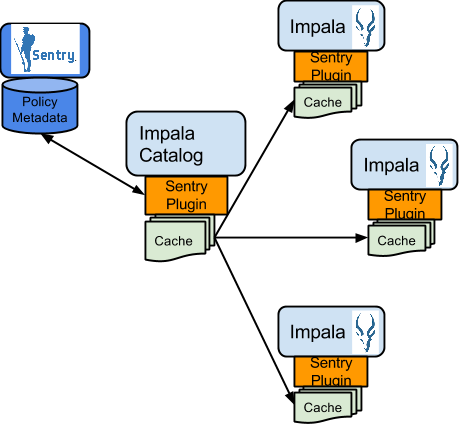

Impala and Sentry

Authorization processing in Impala is similar to that in Hive. The main difference is caching of privileges. Impala’s Catalog server manages caching schema metadata and propagating it to all Impala server nodes. This Catalog server caches Sentry metadata as well. As a result, authorization validation in Impala happens locally and much faster.

For detailed documentation, see Enabling Sentry Authorization for Impala.

Sentry-HDFS Synchronization

Sentry-HDFS authorization is focused on Hive warehouse data - that is, any data that is part of a table in Hive or Impala. The real objective of this integration is to extend the same authorization checks to Hive warehouse data being accessed from any other component, such as Pig, MapReduce, or Spark. At this point, this feature does not replace HDFS ACLs. Tables that are not associated with Sentry will retain their old ACLs.

Sentry privileges map to HDFS file permissions as follows:

- SELECT privilege -> Read access on the file.

- INSERT privilege -> Write access on the file.

- ALL privilege -> Read and Write access on the file.

The NameNode loads a Sentry plugin that caches Sentry privileges as well as Hive metadata. This helps HDFS keep file permissions and Hive table privileges in sync. The Sentry plugin periodically polls the Sentry service and the Hive Metastore to keep the metadata changes in sync.

For example, if Bob runs a Pig job that is reading from the Sales table data files, Pig will try to get the file handle from HDFS. At that point the Sentry plugin on the NameNode will figure out that the file is part of Hive data and overlay Sentry privileges on top of the file ACLs. As a result, HDFS will enforce the same privileges for this Pig client that Hive would apply for a SQL query.
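The privilege mapping and the overlay behavior can be sketched together in Python. This is a simplified model under stated assumptions: the warehouse path, the scratch path, and the per-user privilege tables are hypothetical, and real Sentry privileges are resolved through groups and roles rather than granted directly to users.

```python
# Mapping taken from the list above: Sentry privilege -> file access.
PRIVILEGE_TO_ACCESS = {
    "SELECT": {"read"},
    "INSERT": {"write"},
    "ALL": {"read", "write"},
}

# Hypothetical cached metadata held by the NameNode plugin.
table_locations = {"/user/hive/warehouse/sales": "Sales"}
sentry_privileges = {("Bob", "Sales"): {"SELECT"}}

# Hypothetical HDFS ACLs for files outside the Hive warehouse.
hdfs_acls = {("Bob", "/tmp/scratch/part-0"): {"read", "write"}}


def table_for(path):
    """Return the Hive table that owns `path`, or None if it is not table data."""
    for location, table in table_locations.items():
        if path == location or path.startswith(location + "/"):
            return table
    return None


def allowed_access(user, path):
    """Use Sentry privileges for Hive table data, HDFS ACLs for everything else."""
    table = table_for(path)
    if table is None:
        return hdfs_acls.get((user, path), set())
    access = set()
    for privilege in sentry_privileges.get((user, table), set()):
        access |= PRIVILEGE_TO_ACCESS[privilege]
    return access


print(allowed_access("Bob", "/user/hive/warehouse/sales/part-0"))
print(allowed_access("Bob", "/tmp/scratch/part-0"))
```

In this model, Bob's SELECT privilege on Sales yields read-only access to the table's files, while the file outside the warehouse keeps the access defined by its own ACL.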

For HDFS-Sentry synchronization to work, you must use the Sentry service, not policy file authorization. See Synchronizing HDFS ACLs and Sentry Permissions for more details.

Search and Sentry

Sentry can apply a range of restrictions to various Search tasks, such as accessing data or creating collections. These restrictions are consistently applied, regardless of the way users attempt to complete actions. For example, restricting access to data in a collection restricts that access whether queries come from the command line, from a browser, or through the admin console.

With Search, Sentry stores its privilege policies in a policy file (for example, sentry-provider.ini) which is stored in an HDFS location such as hdfs://ha-nn-uri/user/solr/sentry/sentry-provider.ini.

Note: While Hive and Impala are compatible with the database-backed Sentry service, Search still uses Sentry’s policy file authorization. Note that it is possible for a single cluster to use both the Sentry service (for Hive and Impala as described above) and Sentry policy files (for Solr).

For detailed documentation, see Configuring Sentry Authorization for Solr.

Authorization Administration

GRANT ROLE Analyst TO GROUP finance-managers

Disabling Hive CLI

<property> <name>hadoop.proxyuser.hive.groups</name> <value>hive,hue</value> </property>

More user groups that require access to the Hive Metastore can be added to the comma-separated list as needed.

Using Hue to Manage Sentry Permissions

Hue supports a Security app to manage Sentry authorization. This allows users to explore and change table permissions. Here is a video blog that demonstrates its functionality.

Integration with Authentication Mechanisms for Identity Management

Like many distributed systems, Hadoop projects and workloads often consist of a collection of processes working in concert. In some instances, the initial user process conducts authorization throughout the entirety of the workload or job’s lifecycle. But for processes that spawn additional processes, authorization can pose challenges. In this case, the spawned processes are set to execute as if they were the authenticated user, that is, setuid, and thus only have the privileges of that user. The overarching system requires a mapping to the authenticated principal and the user account must exist on the local host system for the setuid to succeed.

System and Service Authorization - Several Hadoop services are limited to inter-service interactions and are not intended for end-user access. These services do support authentication to protect against unauthorized or malicious users. However, any user or, more typically, another service that has login credentials and can authenticate to the service is authorized to perform all actions allowed by the target service. Examples include ZooKeeper, which is used by internal systems such as YARN, Cloudera Search, and HBase, and Flume, which is configured directly by Hadoop administrators and thus offers no user controls.

The authenticated Kerberos principals for these “system” services are checked each time they access other services such as HDFS, HBase, and MapReduce, and therefore must be authorized to use those resources. Thus, the fact that Flume does not have an explicit authorization model does not imply that Flume has unrestricted access to HDFS and other services; the Flume service principals still must be authorized for specific locations of the HDFS file system. Hadoop administrators can establish separate system users for services such as Flume to segment access and restrict each Flume application to only the parts of the file system it needs.

Authorization within Hadoop Projects

| Project | Authorization Capabilities |
|---|---|
| HDFS | File Permissions, Sentry* |
| MapReduce | File Permissions, Sentry* |
| YARN | File Permissions, Sentry* |
| Accumulo | |
| Flume | None |
| HBase | HBase ACLs |
| HiveServer2 | File Permissions, Sentry |
| Hue | Hue authorization mechanisms (assigning permissions to Hue apps) |
| Impala | Sentry |
| Oozie | ACLs |
| Pig | File Permissions, Sentry* |
| Search | File Permissions, Sentry |
| Sentry | N/A |
| Spark | File Permissions, Sentry* |
| Sqoop | N/A |
| Sqoop2 | None |
| ZooKeeper | ACLs |
| Cloudera Manager | Cloudera Manager roles |
| Cloudera Navigator | Cloudera Navigator roles |
| Backup and Disaster Recovery | N/A |

* Sentry HDFS plug-in; when enabled, Sentry enforces its own access permissions over files that are part of tables defined in the Hive Metastore.

©2016 Cloudera, Inc. All rights reserved
