Overview of Authentication Mechanisms for an Enterprise Data Hub

The purpose of authentication in Hadoop is simply to prove that users or services are who they claim to be. Typically, authentication for CDH applications is handled using Kerberos, an enterprise-grade authentication protocol. Kerberos provides strong security benefits including capabilities that render intercepted authentication packets unusable by an attacker. It virtually eliminates the threat of impersonation by never sending a user's credentials in cleartext over the network. Several components of the Hadoop ecosystem are converging to use Kerberos authentication with the option to manage and store credentials in Active Directory (AD) or Lightweight Directory Access Protocol (LDAP). The rest of this topic describes in detail some basic Kerberos concepts as they relate to Hadoop, and the different ways in which Kerberos can be deployed on a CDH cluster.

- Basic Kerberos Concepts

- Types of Kerberos Deployments

- TLS/SSL Requirements for Secure Distribution of Kerberos Keytabs

- Configuring Kerberos Authentication on a Cluster

- Authentication Mechanisms used by Hadoop Projects

For enterprise components such as Cloudera Manager, Cloudera Navigator, Hue, Hive, and Impala, that face external clients, Cloudera also supports external authentication using services such as AD, LDAP, and SAML. For instructions on how to configure external authentication, refer the Cloudera Security Guide.

Basic Kerberos Concepts

Important: If you are not familiar with how the Kerberos authentication protocol

works, make sure you refer the following links before you proceed:

Important: If you are not familiar with how the Kerberos authentication protocol

works, make sure you refer the following links before you proceed:

For more information about using an Active Directory KDC, refer the section below on Considerations when using an Active Directory KDC and the Microsoft AD documentation.

This section describes how Hadoop uses Kerberos principals and keytabs for user authentication. It also briefly describes how Hadoop uses delegation tokens to authenticate jobs at execution time, to avoid overwhelming the KDC with authentication requests for each job.

Kerberos Principals

A user in Kerberos is called a principal, which is made up of three distinct components: the primary, instance, and realm. A Kerberos principal is used in a Kerberos-secured system to represent a unique identity. The first component of the principal is called the primary, or sometimes the user component. The primary component is an arbitrary string and may be the operating system username of the user or the name of a service. The primary component is followed by an optional section called the instance, which is used to create principals that are used by users in special roles or to define the host on which a service runs, for example. An instance, if it exists, is separated from the primary by a slash and then the content is used to disambiguate multiple principals for a single user or service. The final component of the principal is the realm. The realm is similar to a domain in DNS in that it logically defines a related group of objects, although rather than hostnames as in DNS, the Kerberos realm defines a group of principals . Each realm can have its own settings including the location of the KDC on the network and supported encryption algorithms. Large organizations commonly create distinct realms to delegate administration of a realm to a group within the enterprise. Realms, by convention, are written in uppercase characters.

Kerberos assigns tickets to Kerberos principals to enable them to access Kerberos-secured Hadoop services. For the Hadoop daemon principals, the principal names should be of the format username/fully.qualified.domain.name@YOUR-REALM.COM. In this guide, username in the username/fully.qualified.domain.name@YOUR-REALM.COM principal refers to the username of an existing Unix account that is used by Hadoop daemons, such as hdfs or mapred. Human users who want to access the Hadoop cluster also need to have Kerberos principals; in this case, username refers to the username of the user's Unix account, such as joe or jane. Single-component principal names (such as joe@YOUR-REALM.COM) are acceptable for client user accounts. Hadoop does not support more than two-component principal names.

Kerberos Keytabs

A keytab is a file containing pairs of Kerberos principals and an encrypted copy of that principal's key. A keytab file for a Hadoop daemon is unique to each host since the principal names include the hostname. This file is used to authenticate a principal on a host to Kerberos without human interaction or storing a password in a plain text file. Because having access to the keytab file for a principal allows one to act as that principal, access to the keytab files should be tightly secured. They should be readable by a minimal set of users, should be stored on local disk, and should not be included in host backups, unless access to those backups is as secure as access to the local host.

Delegation Tokens

Users in a Hadoop cluster authenticate themselves to the NameNode using their Kerberos credentials. However, once the user is authenticated, each job subsequently submitted must also be checked to ensure it comes from an authenticated user. Since there could be a time gap between a job being submitted and the job being executed, during which the user could have logged off, user credentials are passed to the NameNode using delegation tokens that can be used for authentication in the future.

Delegation tokens are a secret key shared with the NameNode, that can be used to impersonate a user to get a job executed. While these tokens can be renewed, new tokens can only be obtained by clients authenticating to the NameNode using Kerberos credentials. By default, delegation tokens are only valid for a day. However, since jobs can last longer than a day, each token specifies a JobTracker as a renewer which is allowed to renew the delegation token once a day, until the job completes, or for a maximum period of 7 days. When the job is complete, the JobTracker requests the NameNode to cancel the delegation token.

Token Format

TokenID = {ownerID, renewerID, issueDate, maxDate, sequenceNumber}

TokenAuthenticator = HMAC-SHA1(masterKey, TokenID)

Delegation Token = {TokenID, TokenAuthenticator}Authentication Process

To begin the authentication process, the client first sends the TokenID to the NameNode. The NameNode uses this TokenID and the masterKey to once again generate the corresponding TokenAuthenticator, and consequently, the Delegation Token. If the NameNode finds that the token already exists in memory, and that the current time is less than the expiry date (maxDate) of the token, then the token is considered valid. If valid, the client and the NameNode will then authenticate each other by using the TokenAuthenticator that they possess as the secret key, and MD5 as the protocol. Since the client and NameNode do not actually exchange TokenAuthenticators during the process, even if authentication fails, the tokens are not compromised.

Token Renewal

- The JobTracker requesting renewal is the same as the one identified in the token by renewerID.

- The TokenAuthenticator generated by the NameNode using the TokenID and the masterKey matches the one previously stored by the NameNode.

- The current time must be less than the time specified by maxDate.

If the token renewal request is successful, the NameNode sets the new expiry date to min(current time+renew period, maxDate). If the NameNode was restarted at any time, it will have lost all previous tokens from memory. In this case, the token will be saved to memory once again, this time with a new expiry date. Hence, designated renewers must renew all tokens with the NameNode after a restart, and before relaunching any failed tasks.

A designated renewer can also revive an expired or canceled token as long as the current time does not exceed maxDate. The NameNode cannot tell the difference between a token that was canceled, or has expired, and one that was erased from memory due to a restart, since only the masterKey persists in memory. The masterKey must be updated regularly.

Types of Kerberos Deployments

Kerberos provides strong security benefits including capabilities that render intercepted authentication packets unusable by an attacker. It virtually eliminates the threat of impersonation by never sending a user's credentials in cleartext over the network. Several components of the Hadoop ecosystem are converging to use Kerberos authentication with the option to manage and store credentials in LDAP or AD. Microsoft's Active Directory (AD) is an LDAP directory that also provides Kerberos authentication for added security. Before you configure Kerberos on your cluster, ensure you have a working KDC (MIT KDC or Active Directory), set up. You can then use Cloudera Manager's Kerberos wizard to automate several aspects of configuring Kerberos authentication on your cluster.

Without Kerberos enabled, Hadoop only checks to ensure that a user and their group membership is valid in the context of HDFS. However, it makes no effort to verify that the user is who they say they are.

With Kerberos enabled, users must first authenticate themselves to a Kerberos Key Distribution Centre (KDC) to obtain a valid Ticket-Granting-Ticket (TGT). The TGT is then used by Hadoop services to verify the user's identity. With Kerberos, a user is not only authenticated on the system they are logged into, but they are also authenticated to the network. Any subsequent interactions with other services that have been configured to allow Kerberos authentication for user access, are also secured.

This section describes the architectural options that are available for deploying Hadoop security in enterprise environments. Each option includes a high-level description of the approach along with a list of pros and cons. There are three options currently available:

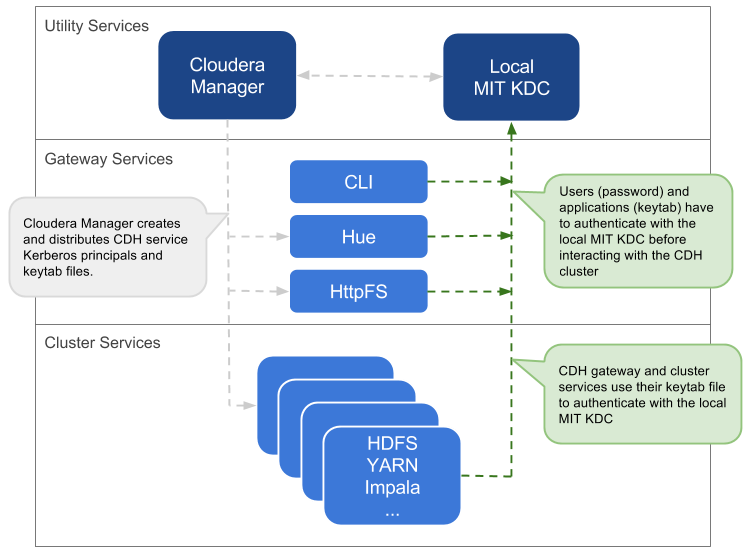

Local MIT KDC

This approach uses an MIT KDC that is local to the cluster. Users and services will have to authenticate with this local KDC before they can interact with the CDH components on the cluster.

- An MIT KDC and a distinct Kerberos realm is deployed locally to the CDH cluster. The local MIT KDC is typically deployed on a Utility host. Additional replicated MIT KDCs for high-availability are optional.

- All cluster hosts must be configured to use the local MIT Kerberos realm using the krb5.conf file.

- All service and user principals must be created in the local MIT KDC and Kerberos realm.

- The local MIT KDC will authenticate both the service principals (using keytab files) and user principals (using passwords).

- Cloudera Manager connects to the local MIT KDC to create and manage the principals for the CDH services running on the cluster. To do this Cloudera Manager uses an admin principal and keytab that is created during the security setup. This step has been automated by the Kerberos wizard. Instructions for manually creating the Cloudera Manager admin principal are provided in the Cloudera Manager security documentation.

- Typically, the local MIT KDC administrator is responsible for creating all other user principals. If you use the Kerberos wizard, Cloudera Manager will create these principals and associated keytab files for you.

| Pros | Cons |

|---|---|

| The authentication mechanism is isolated from the rest of the enterprise. | This mechanism is not integrated with central authentication system. |

| This is fairly easy to setup, especially if you use the Cloudera Manager Kerberos wizard that automates creation and distribution of service principals and keytab files. | User and service principals must be created in the local MIT KDC, which can be time-consuming. |

| The local MIT KDC can be a single point of failure for the cluster unless replicated KDCs can be configured for high-availability. | |

| The local MIT KDC is yet another authentication system to manage. |

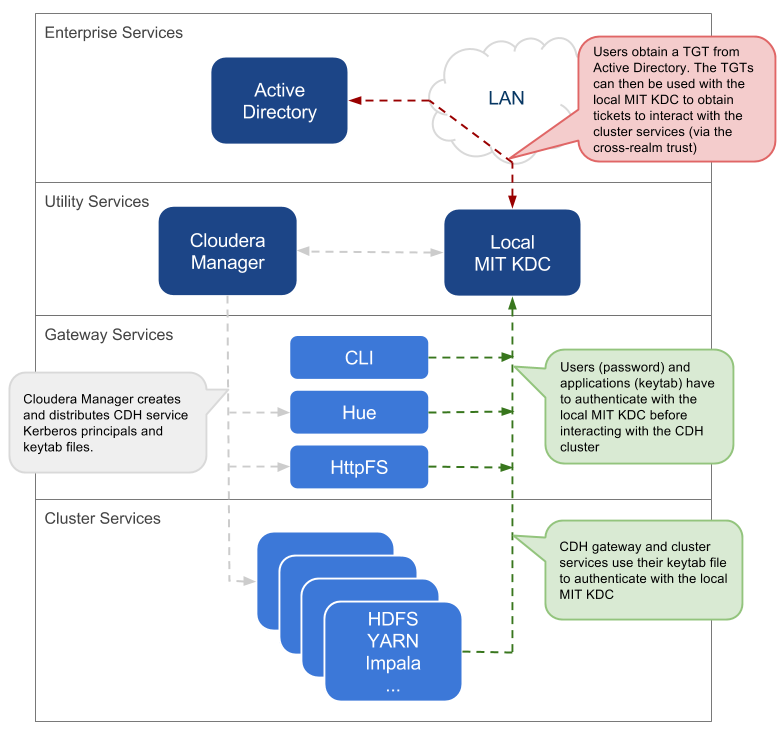

Local MIT KDC with Active Directory Integration

This approach uses an MIT KDC and Kerberos realm that is local to the cluster. However, Active Directory stores the user principals that will access the cluster in a central realm. Users will have to authenticate with this central AD realm to obtain TGTs before they can interact with CDH services on the cluster. Note that CDH service principals reside only in the local KDC realm.

- An MIT KDC and a distinct Kerberos realm is deployed locally to the CDH cluster. The local MIT KDC is typically deployed on a Utility host and additional replicated MIT KDCs for high-availability are optional.

- All cluster hosts are configured with both Kerberos realms (local and central AD) using the krb5.conf file. The default realm should be the local MIT Kerberos realm.

- Service principals should be created in the local MIT KDC and the local Kerberos realm. Cloudera Manager connects to the local MIT KDC to create and manage the principals for the CDH services running on the cluster. To do this, Cloudera Manager uses an admin principal and keytab that is created during the security setup. This step has been automated by the Kerberos wizard. Instructions for manually creating the Cloudera Manager admin principal are provided in the Cloudera Manager security documentation.

- A one-way, cross-realm trust must be set up from the local Kerberos realm to the central AD realm containing the user principals that require access to the CDH cluster. There is no need to create the service principals in the central AD realm and no need to create user principals in the local realm.

| Pros | Cons |

|---|---|

| The local MIT KDC serves as a shield for the central Active Directory from the many hosts and services in a CDH cluster. Service restarts in a large cluster create many simultaneous authentication requests. If Active Directory is unable to handle the spike in load, then the cluster can effectively cause a distributed denial of service (DDOS) attack. | The local MIT KDC can be a single point of failure (SPOF) for the cluster. Replicated KDCs can be configured for high-availability. |

|

This is fairly easy to setup, especially if you use the Cloudera Manager Kerberos wizard that automates creation and distribution of service principals and keytab files. Active Directory administrators will only need to be involved to configure the cross-realm trust during setup. |

The local MIT KDC is yet another authentication system to manage. |

| Integration with central Active Directory for user principal authentication results in a more complete authentication solution. | |

| Allows for incremental configuration. Hadoop security can be configured and verified using local MIT KDC independently of integrating with Active Directory. |

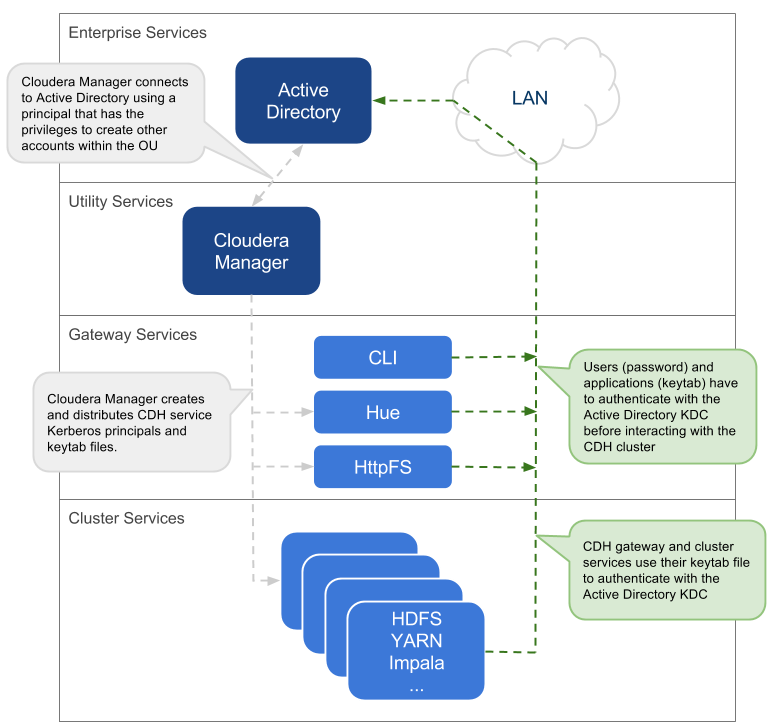

Direct to Active Directory

This approach uses the central Active Directory as the KDC. No local KDC is required. Before you decide upon an AD KDC deployment, make sure you are aware of the following possible ramifications of that decision.

TLS Setup Notes

- Set -keypass to the same value as -storepass. Cloudera Manager assumes that the same password is used to access both the key and the keystore, and therefore, does not support separate values for -keypass and -storepass.

Architecture Summary

- All service and user principals are created in the Active Directory KDC.

- All cluster hosts are configured with the central AD Kerberos realm using krb5.conf.

- Cloudera Manager connects to the Active Directory KDC to create and manage the principals for the CDH services running on the cluster. To do this, Cloudera Manager uses a principal that has the privileges to create other accounts within the given Organisational Unit (OU). This step has been automated by the Kerberos wizard. Instructions for manually creating the Cloudera Manager admin principal are provided in the Cloudera Manager security documentation.

- All service and user principals are authenticated by the Active Directory KDC.

Note: If it is not possible to create the Cloudera Manager admin principal with the required

privileges in the Active Directory KDC, then the CDH services principals will need to be created manually. The corresponding keytab files should then be stored securely on the Cloudera Manager Server

host. Cloudera Manager's Custom Kerberos Keytab Retrieval script can be used to

retrieve the keytab files from the local filesystem.

Note: If it is not possible to create the Cloudera Manager admin principal with the required

privileges in the Active Directory KDC, then the CDH services principals will need to be created manually. The corresponding keytab files should then be stored securely on the Cloudera Manager Server

host. Cloudera Manager's Custom Kerberos Keytab Retrieval script can be used to

retrieve the keytab files from the local filesystem.Identity Integration with Active Directory

A core requirement for enabling Kerberos security in the platform is that users have accounts on all cluster processing nodes. Commercial products such as Centrify or Quest Authentication Services (QAS) provide integration of all cluster hosts for user and group resolution to Active Directory. These tools support automated Kerberos authentication on login by users to a Linux host with AD. For sites not using Active Directory, or sites wanting to use an open source solution, the Site Security Services Daemon (SSSD) can be used with either AD or OpenLDAP compatible directory services and MIT Kerberos for the same needs.

For third-party providers, you may have to purchase licenses from the respective vendors. This procedure requires some planning as it takes time to procure these licenses and deploy these products on a cluster. Care should be taken to ensure that the identity management product does not associate the service principal names (SPNs) with the host principals when the computers are joined to the AD domain. For example, Centrify by default associates the HTTP SPN with the host principal. So the HTTP SPN should be specifically excluded when the hosts are joined to the domain.

-

Active Directory Organizational Unit (OU) and OU user - A separate OU in Active Directory should be created along with an account that has privileges to create additional accounts in that OU.

-

Enable SSL for AD - Cloudera Manager should be able to connect to AD on the LDAPS (TCP 636) port.

-

Principals and Keytabs - In a direct-to-AD deployment that is set up using the Kerberos wizard, by default, all required principals and keytabs will be created, deployed and managed by Cloudera Manager. However, if for some reason you cannot allow Cloudera Manager to manage your direct-to-AD deployment, then unique accounts should be manually created in AD for each service running on each host and keytab files must be provided for the same. These accounts should have the AD User Principal Name (UPN) set to service/fqdn@REALM, and the Service Principal Name (SPN) set to service/fqdn. The principal name in the keytab files should be the UPN of the account. The keytab files should follow the naming convention: servicename_fqdn.keytab. The following principals and keytab files must be created for each host they run on: Hadoop Users in Cloudera Manager and CDH.

-

AD Bind Account - Create an AD account that will be used for LDAP bindings in Hue, Cloudera Manager and Cloudera Navigator.

-

AD Groups for Privileged Users - Create AD groups and add members for the authorized users, HDFS admins and HDFS superuser groups.

- Authorized users – A group consisting of all users that need access to the cluster

- HDFS admins – Groups of users that will run HDFS administrative commands

- HDFS super users – Group of users that require superuser privilege, that is, read/wwrite access to all data and directories in HDFS

Putting regular users into the HDFS superuser group is not recommended. Instead, an account that administrators escalate issues to, should be part of the HDFS superuser group.

-

AD Groups for Role-Based Access to Cloudera Manager and Cloudera Navigator - Create AD groups and add members to these groups so you can later configure role-based access to Cloudera Manager and Cloudera Navigator.

Cloudera Manager roles and their definitions are available here: Cloudera Manager User Roles. Cloudera Navigator roles and their definitions are available here: Cloudera Navigator Data Management Component User Roles

-

AD Test Users and Groups - At least one existing AD user and the group that the user belongs to should be provided to test whether authorization rules work as expected.

TLS/SSL Requirements for Secure Distribution of Kerberos Keytabs

Communication between Cloudera Manager Server and Cloudera Manager Agents must be encrypted so that sensitive information such as Kerberos keytabs are not distributed from the Server to Agents in cleartext.

- Level 1 (Good) - This level encrypts communication between the browser and Cloudera Manager, and between Agents and the Cloudera Manager Server. See Configuring TLS Encryption Only for Cloudera Manager followed by Level 1: Configuring TLS Encryption for Cloudera Manager Agents for instructions. Level 1 encryption prevents snooping of commands and controls ongoing communication between Agents and Cloudera Manager.

- Level 2 (Better) - This level encrypts communication between the Agents and the Server, and provides strong verification of the Cloudera Manager Server certificate by Agents. See Level 2: Configuring TLS Verification of Cloudera Manager Server by the Agents. Level 2 provides Agents with additional security by verifying trust for the certificate presented by the Cloudera Manager Server.

- Level 3 (Best) - This includes encrypted communication between the Agents and the Server, strong verification of the Cloudera Manager Server certificate by the Agents, and authentication of Agents to the Cloudera Manager Server using self-signed or CA-signed certs. See Level 3: Configuring TLS Authentication of Agents to the Cloudera Manager Server. Level 3 TLS prevents cluster Servers from being spoofed by untrusted Agents running on a host. Cloudera recommends that you configure Level 3 TLS encryption for untrusted network environments before enabling Kerberos authentication. This provides secure communication of keytabs between the Cloudera Manager Server and verified Agents across the cluster.

This means, if you want to implement Level 3 TLS, you will need to provide TLS certificates for every host in the cluster. For minimal security, that is, Level 1 TLS, you will at least need to provide a certificate for the Cloudera Manager Server host, and a certificate for each of the gateway nodes to secure the web consoles.

If the CA that signs these certificates is an internal CA, then you will also need to provide the complete certificate chain of the CA that signed these certificates. The same certificates can be used to encrypt Cloudera Manager, Hue, HiveServer2 & Impala JDBC/ODBC interfaces, and for encrypted shuffle. If any external services such as LDAPS or SAML use certificates signed by an internal CA, then the public certificate of the Root CA and any intermediate CA in the chain should be provided.

Configuring Kerberos Authentication on a Cluster

Before you use the following sections to configure Kerberos on your cluster, ensure you have a working KDC (MIT KDC or Active Directory), set up.

- Cloudera Manager 5.1 introduced a new wizard to automate the procedure to set up Kerberos on a cluster. Using the KDC information you enter, the wizard will create new principals and

keytab files for your CDH services. The wizard can be used to deploy the krb5.conf file cluster-wide, and automate other manual tasks such as stopping all services,

deploying client configuration and restarting all services on the cluster.

If you want to use the Kerberos wizard, follow the instructions at Enabling Kerberos Authentication Using the Wizard.

- If you do not want to use the Kerberos wizard, follow the instructions at Enabling Kerberos Authentication Without the Wizard.

Authentication Mechanisms used by Hadoop Projects

| Project | Authentication Capabilities |

|---|---|

| HDFS | Kerberos, SPNEGO (HttpFS) |

| MapReduce | Kerberos (also see HDFS) |

| YARN | Kerberos (also see HDFS) |

| Accumulo | Kerberos (partial) |

| Flume | Kerberos (starting CDH 5.4) |

| HBase | Kerberos (HBase Thrift and REST clients must perform their own user authentication) |

| HiveServer | None |

| HiveServer2 | Kerberos, LDAP, Custom/pluggable authentication |

| Hive Metastore | Kerberos |

| Hue | Kerberos, LDAP, SAML, Custom/pluggable authentication |

| Impala | Kerberos, LDAP, SPNEGO (Impala Web Console) |

| Oozie | Kerberos, SPNEGO |

| Pig | Kerberos |

| Search | Kerberos, SPNEGO |

| Sentry | Kerberos |

| Spark | Kerberos |

| Sqoop | Kerberos |

| Sqoop2 | Kerberos (starting CDH 5.4) |

| Zookeeper | Kerberos |

| Cloudera Manager | Kerberos, LDAP, SAML |

| Cloudera Navigator | See Cloudera Manager |

| Backup and Disaster Recovery | See Cloudera Manager |

| << Security Overview for an Enterprise Data Hub | ©2016 Cloudera, Inc. All rights reserved | Overview of Data Protection Mechanisms for an Enterprise Data Hub >> |

| Terms and Conditions Privacy Policy |