Security Overview for an Enterprise Data Hub

As adoption of Hadoop increases, the volume and variety of data handled by Hadoop deployments have also grown. In production deployments, much of this data is sensitive or subject to industry regulations and governance controls. To comply with such regulations, Hadoop must offer strong capabilities to protect the data it stores against attack, and must keep that protection in force at all times. The security landscape for Hadoop is changing rapidly, but not at the same rate for every Hadoop component, which is why security capabilities can appear uneven across the Hadoop ecosystem: some components are compatible with stronger security technologies than others.

This topic describes the facets of Hadoop security and the stages in which security can be added to a Hadoop cluster.

The rest of this security overview outlines the various options organizations have for integrating Hadoop security (authentication, authorization, data protection and governance) in enterprise environments. Its aim is to provide you with enough information to make an informed decision as to how you would like to deploy Hadoop security in your organization. In some cases, it also describes the architectural considerations for setting up, managing, and integrating Hadoop security.


Facets of Hadoop Security

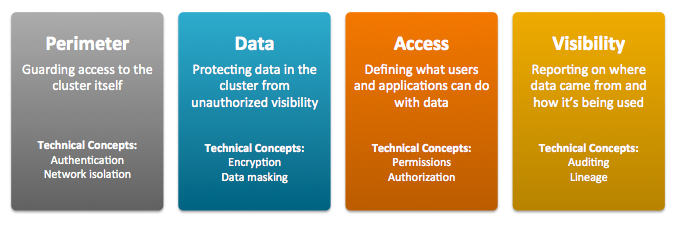

Hadoop security can be viewed as a series of business and operational capabilities, including:

- Perimeter Security, which focuses on guarding access to the cluster, its data, and its various services. In information security, this translates to Authentication.

- Data Protection, which comprises the protection of data from unauthorized access, at rest and in transit. In information security, this translates to Encryption.

- Entitlement, which includes the definition and enforcement of what users and applications can do with data. In information security, this translates to Authorization.

- Transparency, which consists of the reporting and monitoring on the where, when, and how of data usage. In information security, this translates to Auditing.

Application developers and IT teams can use the security capabilities built into the security-related components of the Hadoop cluster, and can also leverage external tools, to cover all aspects of Hadoop security. The Hadoop ecosystem covers a wide range of applications, datastores, and computing frameworks, and each of these components manifests these operational capabilities differently.

Levels of Hadoop Security

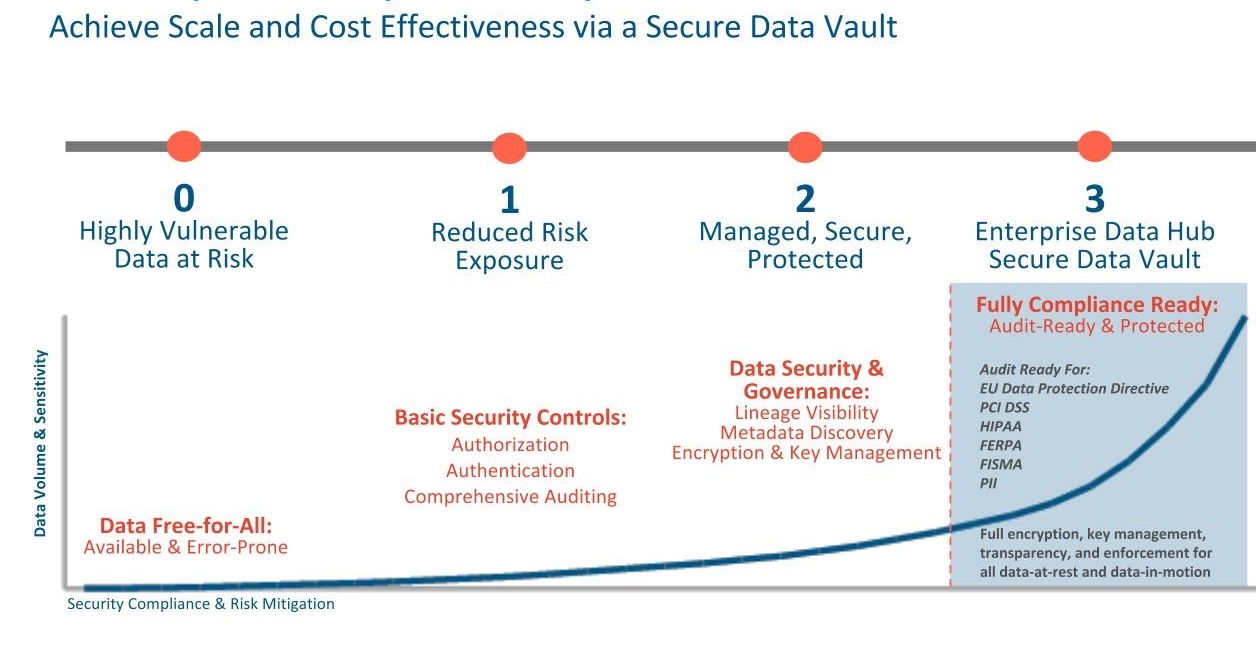

- Level 0: Cloudera recommends that you start with a fully functional non-secure cluster before you begin adding security to it. However, a non-secure cluster is very vulnerable to attacks and must never be used in production.

- Level 1: Start with the basics. First, set up authentication checks to prove that the users and services accessing the cluster are who they claim to be. You can then implement some simple authorization mechanisms that allow you to assign access privileges to users and user groups. Auditing procedures that track who accesses the cluster, and how, can also be added in this step. However, these are still very basic security measures. If you do go to production with only authentication, authorization, and auditing enabled, make sure your cluster administrators are well trained and that the security procedures in place have been certified by an expert.

- Level 2: For more robust security, cluster data, or at least sensitive data, must be encrypted. There should be key-management systems in place for managing encrypted data and the associated encryption keys. Data governance is an important aspect of security. Governance includes auditing accesses to data residing in metastores, reviewing and updating metadata, and discovering the lineage of data objects.

- Level 3: At this level, all data on the cluster, at rest and in transit, must be encrypted, and the key management system in use must be fault-tolerant. A completely secure enterprise data hub (EDH) is one that can stand up to the audits required for compliance with PCI, HIPAA, and other common industry standards. The regulations associated with these standards do not apply just to the EDH storing this data. Any system that integrates with the EDH in question is subject to scrutiny as well.

Leveraging all four levels of security, Cloudera’s EDH platform can pass technology reviews for most common compliance regulations.
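The level definitions above can be read as a cumulative checklist. The following Python sketch is illustrative only and is not part of any Cloudera tool: the `ClusterControls` class, its field names, and the `security_level` function are hypothetical names introduced for this example. It also makes two simplifying assumptions: Level 1 counts as reached only when authentication, authorization, and auditing are all enabled, and each level requires every control of the levels below it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ClusterControls:
    """Hypothetical inventory of the security controls enabled on a cluster."""
    authentication: bool = False
    authorization: bool = False
    auditing: bool = False
    sensitive_data_encrypted: bool = False
    key_management: bool = False
    data_governance: bool = False
    all_data_encrypted_at_rest: bool = False
    all_data_encrypted_in_transit: bool = False
    fault_tolerant_key_management: bool = False


def security_level(c: ClusterControls) -> int:
    """Return the highest level (0-3) whose controls are all in place."""
    # Assumption: Level 1 needs authentication, authorization, and auditing.
    level1 = c.authentication and c.authorization and c.auditing

    # Encrypting all data at rest also covers the sensitive data, and a
    # fault-tolerant key management system is still a key management system.
    sensitive = c.sensitive_data_encrypted or c.all_data_encrypted_at_rest
    keys = c.key_management or c.fault_tolerant_key_management
    level2 = level1 and sensitive and keys and c.data_governance

    level3 = (
        level2
        and c.all_data_encrypted_at_rest
        and c.all_data_encrypted_in_transit
        and c.fault_tolerant_key_management
    )

    if level3:
        return 3
    if level2:
        return 2
    if level1:
        return 1
    return 0
```

For example, a cluster with only authentication, authorization, and auditing enabled evaluates to Level 1 under these assumptions, and a cluster with no controls enabled evaluates to Level 0.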

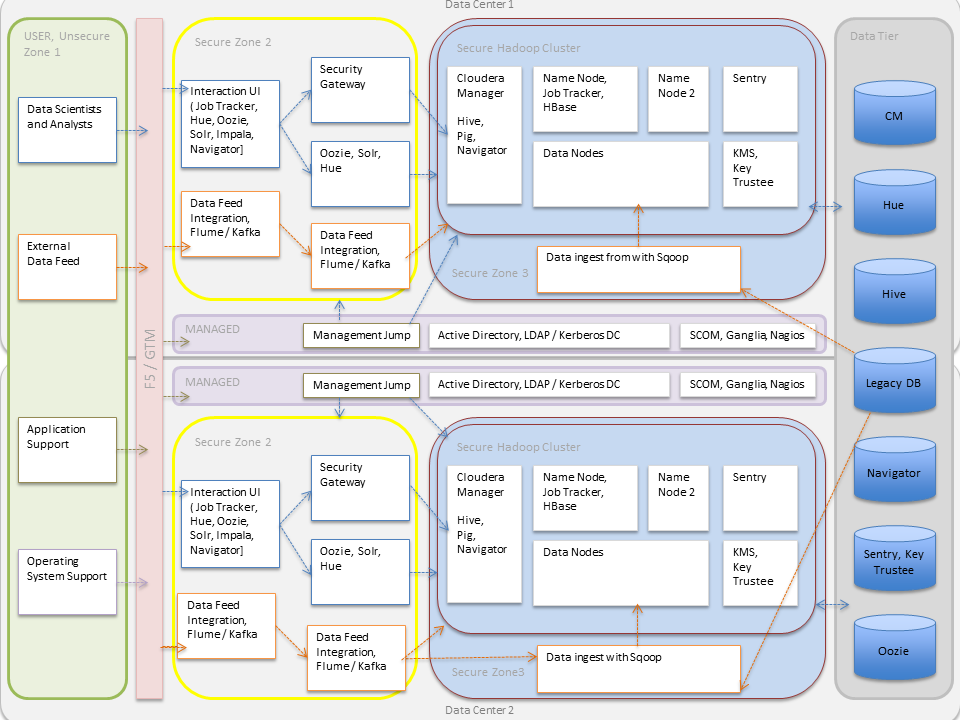

Hadoop Security Architecture

- As illustrated, external data streams can be authenticated by mechanisms in place for Flume and Kafka. Any data from legacy databases is ingested using Sqoop. Users such as data scientists and analysts can interact directly with the cluster using interfaces such as Hue or Cloudera Manager. Alternatively, they could be using a service like Impala for creating and submitting jobs for data analysis. All of these interactions can be protected by an Active Directory Kerberos deployment.

- Encryption can be applied to data at rest using transparent HDFS encryption with an enterprise-grade Key Trustee Server. Cloudera also recommends using Navigator Encrypt to protect cluster data associated with the Cloudera Manager, Cloudera Navigator, Hive, and HBase metastores, as well as any log files or spills.

- Authorization policies can be enforced using Sentry (for services such as Hive, Impala and Search) as well as HDFS Access Control Lists.

- Auditing capabilities can be provided by using Cloudera Navigator.
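As a rough planning aid, the architecture described above can be summarized as a mapping from each security facet to the components named for it. The Python sketch below is an assumption-laden illustration, not a Cloudera API: `FACET_COMPONENTS` and `uncovered_facets` are hypothetical names, the component labels are plain strings chosen for this example, and a facet is treated as covered as soon as any one of its components is deployed.

```python
# Facet -> components named in the architecture description above.
# The string labels are illustrative; they are not configuration keys.
FACET_COMPONENTS = {
    "Authentication": {"Kerberos"},
    "Encryption": {
        "HDFS Transparent Encryption",
        "Key Trustee Server",
        "Navigator Encrypt",
    },
    "Authorization": {"Sentry", "HDFS ACLs"},
    "Auditing": {"Cloudera Navigator"},
}


def uncovered_facets(deployed):
    """Return, sorted by name, the facets with no deployed component."""
    deployed = set(deployed)
    return sorted(
        facet
        for facet, components in FACET_COMPONENTS.items()
        if not components & deployed
    )
```

Calling `uncovered_facets(["Kerberos", "Sentry"])` returns `["Auditing", "Encryption"]`, which flags the facets that still need a component before the cluster addresses all four.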

©2016 Cloudera, Inc. All rights reserved
