Upgrading to CDH 5.3 Using Packages

Minimum Required Role: Cluster Administrator (also provided by Full Administrator)

If you originally used Cloudera Manager to install CDH 5 using packages, you can upgrade to CDH 5.3 using either packages or parcels. Using parcels is recommended, because the upgrade wizard for parcels handles the upgrade almost completely automatically.

The following procedure requires cluster downtime. If you use parcels, have a Cloudera Enterprise license, and have enabled HDFS high availability, you can perform a rolling upgrade that lets you avoid cluster downtime.

To upgrade CDH using packages, complete the following steps:

- Before You Begin

- Upgrade Unmanaged Components

- Stop Cluster Services

- Back up the HDFS Metadata on the NameNode

- Back up Metastore Databases

- Upgrade Managed Components

- Update Symlinks for the Newly Installed Components

- Run the Upgrade Wizard

- Perform Manual Upgrade or Recover from Failed Steps

- Finalize the HDFS Metadata Upgrade

- Upgrade Wizard Actions

Before You Begin

- Read the CDH 5 Release Notes.

- Read the Cloudera Manager 5 Release Notes.

- Ensure Java 1.7 is installed across the cluster. For installation instructions and recommendations, see Upgrading to Oracle JDK 1.7 in a Cloudera Manager Deployment, and make sure you have read Known Issues and Workarounds in Cloudera Manager 5 before you proceed with the upgrade.

- Ensure that the Cloudera Manager minor version is equal to or greater than the CDH minor version. For example:

| Target CDH Version | Minimum Cloudera Manager Version |
| --- | --- |
| 5.0.5 | 5.0.x |
| 5.1.4 | 5.1.x |
| 5.4.1 | 5.4.x |

- Date partition columns: as of Hive 0.13, implemented in CDH 5.2, Hive validates the format of dates in partition columns, if they are stored as dates. A partition column with a date in invalid form can neither be used nor dropped once you upgrade to CDH 5.2 or higher. To avoid this problem, do one of the following:

- Fix any invalid dates before you upgrade. Hive expects dates in partition columns to be in the form YYYY-MM-DD.

- Store dates in partition columns as strings or integers.

To find existing partition key values that are stored as dates, you can run a query such as the following against the Hive metastore database:

SELECT "DBS"."NAME", "TBLS"."TBL_NAME", "PARTITION_KEY_VALS"."PART_KEY_VAL"
FROM "PARTITION_KEY_VALS"
INNER JOIN "PARTITIONS" ON "PARTITION_KEY_VALS"."PART_ID" = "PARTITIONS"."PART_ID"
INNER JOIN "PARTITION_KEYS" ON "PARTITION_KEYS"."TBL_ID" = "PARTITIONS"."TBL_ID"
INNER JOIN "TBLS" ON "TBLS"."TBL_ID" = "PARTITIONS"."TBL_ID"
INNER JOIN "DBS" ON "DBS"."DB_ID" = "TBLS"."DB_ID"
AND "PARTITION_KEYS"."INTEGER_IDX" = "PARTITION_KEY_VALS"."INTEGER_IDX"
AND "PARTITION_KEYS"."PKEY_TYPE" = 'date';

- Whenever upgrading Impala, whether in CDH or a standalone parcel or package, check your SQL against the newest reserved words listed in incompatible changes. If upgrading across multiple versions or in case of any problems, check against the full list of Impala keywords.

- Run the Host Inspector and fix every issue.

- If using security, run the Security Inspector.

- Run hdfs fsck / and hdfs dfsadmin -report and fix every issue.

- Run hbase hbck.

- Review the upgrade procedure and reserve a maintenance window with enough time allotted to perform all steps. For production clusters, Cloudera recommends allocating up to a full day maintenance window to perform the upgrade, depending on the number of hosts, the amount of experience you have with Hadoop and Linux, and the particular hardware you are using.

- To avoid lots of alerts during the upgrade process, you can enable maintenance mode on your cluster before you start the upgrade. This will stop email alerts and SNMP traps from being sent, but will not stop checks and configuration validations from being made. Be sure to exit maintenance mode when you have finished the upgrade to re-enable Cloudera Manager alerts.

- Hue validates CA certificates and needs a truststore. To create one, follow the instructions in Hue as a TLS/SSL Client.
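Two of the checks in this list are mechanical enough to script. The following is a minimal sketch rather than a Cloudera tool. The function names cm_supports_cdh and is_valid_partition_date are hypothetical, version strings are assumed to have the form MAJOR.MINOR[.PATCH], and the date check only tests the YYYY-MM-DD form with plausible month and day ranges (it knows nothing about month lengths or leap years).

```shell
#!/bin/sh
# Illustrative helpers only; the function names are hypothetical.

# cm_supports_cdh CM_VERSION CDH_VERSION
# Succeeds when Cloudera Manager's MAJOR.MINOR is equal to or greater than
# the target CDH MAJOR.MINOR. Comparing the major version first is an
# assumption added here; the rule in the text only mentions the minor version.
cm_supports_cdh() {
    cm_major=$(printf '%s' "$1" | cut -d. -f1)
    cm_minor=$(printf '%s' "$1" | cut -d. -f2)
    cdh_major=$(printf '%s' "$2" | cut -d. -f1)
    cdh_minor=$(printf '%s' "$2" | cut -d. -f2)
    if [ "$cm_major" -ne "$cdh_major" ]; then
        [ "$cm_major" -gt "$cdh_major" ]
        return
    fi
    [ "$cm_minor" -ge "$cdh_minor" ]
}

# is_valid_partition_date VALUE
# Succeeds when VALUE looks like YYYY-MM-DD with month 01-12 and day 01-31.
is_valid_partition_date() {
    case "$1" in
        [0-9][0-9][0-9][0-9]-[0-9][0-9]-[0-9][0-9]) ;;
        *) return 1 ;;
    esac
    month=${1#*-}        # drop the year, leaving MM-DD
    month=${month%-*}    # drop the day, leaving MM
    day=${1##*-}         # keep only DD
    month=${month#0}     # strip one leading zero before comparing numerically
    day=${day#0}
    [ "$month" -ge 1 ] && [ "$month" -le 12 ] &&
        [ "$day" -ge 1 ] && [ "$day" -le 31 ]
}

# Example calls with made-up inputs.
if cm_supports_cdh 5.1.0 5.1.4; then
    echo "Cloudera Manager 5.1.0 can manage CDH 5.1.4"
fi
if ! is_valid_partition_date "2015-2-30"; then
    echo "2015-2-30 is not in YYYY-MM-DD form"
fi
```

The version check ignores the patch level on purpose, because the rule compares minor versions only. Values that pass the date check can still be invalid calendar dates, so treat a pass as "well formed", not as "accepted by Hive".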

Upgrade Unmanaged Components

- Mahout

- Pig

- Whirr

For information on upgrading these unmanaged components, see Upgrading Mahout, Upgrading Pig, and Upgrading Whirr.

Stop Cluster Services

- On the Home page, click the drop-down menu to the right of the cluster name and select Stop.

- Click Stop in the confirmation screen. The Command Details window shows the progress of stopping services.

When All services successfully stopped appears, the task is complete and you can close the Command Details window.

Back up the HDFS Metadata on the NameNode

The steps in this section apply to the following upgrades:

- CDH 5.0 or 5.1 to 5.2 or higher

- CDH 5.2 or 5.3 to 5.4 or higher

- Go to the HDFS service.

- Click the Configuration tab.

- In the Search field, search for "NameNode Data Directories" and note the value.

- On the active NameNode host, back up the directory listed in the NameNode Data Directories property. If more than one is listed, make a backup of one directory, since each directory is a complete copy. For example, if the NameNode data directory is /data/dfs/nn, do the following as root:

# cd /data/dfs/nn
# tar -cvf /root/nn_backup_data.tar .

You should see output like this:

./
./current/
./current/fsimage
./current/fstime
./current/VERSION
./current/edits
./image/
./image/fsimage

If there is a file with the extension lock in the NameNode data directory, the NameNode most likely is still running. Repeat the steps, starting by shutting down the NameNode role.
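The backup and the lock-file check can be combined into one guarded step. The following is a minimal sketch, assuming a POSIX shell and tar. The name backup_nn_dir is a hypothetical helper, and the check only looks for files ending in .lock at the top level of the data directory, as described above.

```shell
#!/bin/sh
# Illustrative helper only; backup_nn_dir is a hypothetical name.

# backup_nn_dir NN_DIR BACKUP_TAR
# Archives NN_DIR into BACKUP_TAR (use an absolute path outside NN_DIR).
# Refuses to run when a *.lock file is present, because that suggests the
# NameNode is still running.
backup_nn_dir() {
    nn_dir=$1
    backup_tar=$2
    if [ ! -d "$nn_dir" ]; then
        echo "no such directory: $nn_dir" >&2
        return 1
    fi
    for lock_file in "$nn_dir"/*.lock; do
        # When nothing matches, the pattern stays literal and -e is false.
        if [ -e "$lock_file" ]; then
            echo "found $lock_file; stop the NameNode role first" >&2
            return 2
        fi
    done
    # Same archive layout as the manual commands: paths relative to NN_DIR.
    ( cd "$nn_dir" && tar -cvf "$backup_tar" . )
}

# Example (run as root on the active NameNode host):
#   backup_nn_dir /data/dfs/nn /root/nn_backup_data.tar
```

Checking for the lock file before running tar avoids producing an archive of a directory that is still being written to.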

Back up Metastore Databases

Back up the Hive, Sentry, and Sqoop metastore databases. For each affected service:

- If not already stopped, stop the service.

- Back up the database. See Backing Up Databases.

Upgrade Managed Components

- Download and save the repo file.

- On Red Hat-compatible systems:

Click the entry in the table below that matches your Red Hat or CentOS system, go to the repo file for your system and save it in the /etc/yum.repos.d/ directory.

For OS Version

Click this Link

Red Hat/CentOS/Oracle 5

Red Hat/CentOS 6 (64-bit)

- On SLES systems:

- Run the following command:

$ sudo zypper addrepo -f http://archive.cloudera.com/cdh5/sles/11/x86_64/cdh/cloudera-cdh5.repo

- Update your system package index by running:

$ sudo zypper refresh

- On Ubuntu and Debian systems:

Create a new file /etc/apt/sources.list.d/cloudera.list with the following contents:

- For Ubuntu systems:

deb [arch=amd64] http://archive.cloudera.com/cdh5/<OS-release-arch> <RELEASE>-cdh5 contrib
deb-src http://archive.cloudera.com/cdh5/<OS-release-arch> <RELEASE>-cdh5 contrib

- For Debian systems:

deb http://archive.cloudera.com/cdh5/<OS-release-arch> <RELEASE>-cdh5 contrib
deb-src http://archive.cloudera.com/cdh5/<OS-release-arch> <RELEASE>-cdh5 contrib

where: <OS-release-arch> is debian/wheezy/amd64/cdh or ubuntu/precise/amd64/cdh, and <RELEASE> is the name of your distribution, which you can find by running lsb_release -c.

- Edit the repo file to point to the release you want to install or upgrade to.

- On Red Hat-compatible systems:

Open the repo file you have just saved and change the 5 at the end of the line that begins baseurl= to the version number you want.

For example, if you have saved the file for Red Hat 6, it will look like this when you open it for editing:

[cloudera-cdh5]
name=Cloudera's Distribution for Hadoop, Version 5
baseurl=http://archive.cloudera.com/cdh5/redhat/6/x86_64/cdh/5/
gpgkey = http://archive.cloudera.com/cdh5/redhat/6/x86_64/cdh/RPM-GPG-KEY-cloudera
gpgcheck = 1

For example, if you want to install CDH 5.1.0, change baseurl=http://archive.cloudera.com/cdh5/redhat/6/x86_64/cdh/5/ to

baseurl=http://archive.cloudera.com/cdh5/redhat/6/x86_64/cdh/5.1.0/

In this example, the resulting file should look like this:

[cloudera-cdh5]
name=Cloudera's Distribution for Hadoop, Version 5
baseurl=http://archive.cloudera.com/cdh5/redhat/6/x86_64/cdh/5.1.0/
gpgkey = http://archive.cloudera.com/cdh5/redhat/6/x86_64/cdh/RPM-GPG-KEY-cloudera
gpgcheck = 1

- On SLES systems:

Open the repo file that you have just added to your system and change the 5 at the end of the line that begins baseurl= to the version number you want.

The file should look like this when you open it for editing:

[cloudera-cdh5]
name=Cloudera's Distribution for Hadoop, Version 5
baseurl=http://archive.cloudera.com/cdh5/sles/11/x86_64/cdh/5/
gpgkey = http://archive.cloudera.com/cdh5/sles/11/x86_64/cdh/RPM-GPG-KEY-cloudera
gpgcheck = 1

For example, if you want to install CDH 5.1.0, change baseurl=http://archive.cloudera.com/cdh5/sles/11/x86_64/cdh/5/ to

baseurl=http://archive.cloudera.com/cdh5/sles/11/x86_64/cdh/5.1.0/

In this example, the resulting file should look like this:

[cloudera-cdh5]
name=Cloudera's Distribution for Hadoop, Version 5
baseurl=http://archive.cloudera.com/cdh5/sles/11/x86_64/cdh/5.1.0/
gpgkey = http://archive.cloudera.com/cdh5/sles/11/x86_64/cdh/RPM-GPG-KEY-cloudera
gpgcheck = 1

- On Ubuntu and Debian systems:

Replace -cdh5 near the end of each line (before contrib) with the CDH release you need to install. Here are examples using CDH 5.1.0:

For 64-bit Ubuntu Precise:

deb [arch=amd64] http://archive.cloudera.com/cdh5/ubuntu/precise/amd64/cdh precise-cdh5.1.0 contrib
deb-src http://archive.cloudera.com/cdh5/ubuntu/precise/amd64/cdh precise-cdh5.1.0 contrib

For Debian Wheezy:

deb http://archive.cloudera.com/cdh5/debian/wheezy/amd64/cdh wheezy-cdh5.1.0 contrib
deb-src http://archive.cloudera.com/cdh5/debian/wheezy/amd64/cdh wheezy-cdh5.1.0 contrib

- (Optionally) add a repository key:

- Red Hat compatible

- Red Hat/CentOS/Oracle 5

$ sudo rpm --import http://archive.cloudera.com/cdh5/redhat/5/x86_64/cdh/RPM-GPG-KEY-cloudera

- Red Hat/CentOS/Oracle 6

$ sudo rpm --import http://archive.cloudera.com/cdh5/redhat/6/x86_64/cdh/RPM-GPG-KEY-cloudera

- SLES

$ sudo rpm --import http://archive.cloudera.com/cdh5/sles/11/x86_64/cdh/RPM-GPG-KEY-cloudera

- Ubuntu and Debian

- Ubuntu Precise

$ curl -s http://archive.cloudera.com/cdh5/ubuntu/precise/amd64/cdh/archive.key | sudo apt-key add -

- Debian Wheezy

$ curl -s http://archive.cloudera.com/cdh5/debian/wheezy/amd64/cdh/archive.key | sudo apt-key add -

- Install the CDH packages:

- Red Hat compatible

$ sudo yum clean all
$ sudo yum install avro-tools crunch flume-ng hadoop-hdfs-fuse hadoop-httpfs hadoop-kms hbase hbase-solr hive-hbase hive-webhcat hue-beeswax hue-hbase hue-impala hue-pig hue-plugins hue-rdbms hue-search hue-spark hue-sqoop hue-zookeeper impala impala-shell kite llama mahout oozie parquet pig pig-udf-datafu search sentry solr solr-mapreduce spark-python sqoop sqoop2 whirr zookeeper

- SLES

$ sudo zypper clean --all
$ sudo zypper install avro-tools crunch flume-ng hadoop-hdfs-fuse hadoop-httpfs hadoop-kms hbase hbase-solr hive-hbase hive-webhcat hue-beeswax hue-hbase hue-impala hue-pig hue-plugins hue-rdbms hue-search hue-spark hue-sqoop hue-zookeeper impala impala-shell kite llama mahout oozie parquet pig pig-udf-datafu search sentry solr solr-mapreduce spark-python sqoop sqoop2 whirr zookeeper

- Ubuntu and Debian

$ sudo apt-get update
$ sudo apt-get install avro-tools crunch flume-ng hadoop-hdfs-fuse hadoop-httpfs hadoop-kms hbase hbase-solr hive-hbase hive-webhcat hue-beeswax hue-hbase hue-impala hue-pig hue-plugins hue-rdbms hue-search hue-spark hue-sqoop hue-zookeeper impala impala-shell kite llama mahout oozie parquet pig pig-udf-datafu search sentry solr solr-mapreduce spark-python sqoop sqoop2 whirr zookeeper

Note:

Installing these packages will also install all the other CDH packages that are needed for a full CDH 5 installation.
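The baseurl edit described for Red Hat-compatible and SLES systems can also be scripted instead of done by hand. The following is a minimal sketch, assuming the repo file contains a baseurl line ending in /cdh/<version>/ like the examples in this section. The name pin_repo_release is hypothetical, and the function writes a temporary file next to the repo file rather than relying on in-place editing options that differ between sed implementations.

```shell
#!/bin/sh
# Illustrative helper only; pin_repo_release is a hypothetical name.

# pin_repo_release REPO_FILE VERSION
# Rewrites the trailing version component of the baseurl line, for example
# .../cdh/5/ becomes .../cdh/5.1.0/. All other lines are left untouched.
pin_repo_release() {
    repo_file=$1
    version=$2
    case "$version" in
        ''|*[!0-9.]*)
            echo "invalid version: $version" >&2
            return 1
            ;;
    esac
    tmp_file="${repo_file}.tmp.$$"
    sed "s|^\(baseurl[[:space:]]*=[[:space:]]*.*/cdh/\)[0-9][0-9.]*/\{0,1\}[[:space:]]*\$|\1${version}/|" \
        "$repo_file" > "$tmp_file" && mv "$tmp_file" "$repo_file"
}

# Example (needs write access to the directory, typically root):
#   pin_repo_release /etc/yum.repos.d/cloudera-cdh5.repo 5.1.0
```

Because only the line that begins with baseurl is matched, the gpgkey URL keeps pointing at the unversioned key location, as in the example files above.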

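Update Symlinks for the Newly Installed Components

Restart the Cloudera Manager Agent on each host so that the symlinks point to the newly installed components: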

$ sudo service cloudera-scm-agent restart
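On a cluster with many hosts, the restart can be looped over SSH. The following is a minimal sketch under several assumptions: the agent needs restarting on every host that received new packages, a hypothetical hosts file lists one hostname per line, and your account has SSH access and passwordless sudo on each host. The name restart_agents and the DRY_RUN variable are inventions for this example.

```shell
#!/bin/sh
# Illustrative helper only; restart_agents and DRY_RUN are hypothetical.

# restart_agents HOSTS_FILE
# HOSTS_FILE holds one hostname per line; blank lines and lines starting
# with '#' are skipped. With DRY_RUN=1 the commands are printed, not run.
restart_agents() {
    hosts_file=$1
    while IFS= read -r host; do
        case "$host" in
            ''|'#'*) continue ;;
        esac
        if [ "${DRY_RUN:-0}" = "1" ]; then
            echo "ssh $host sudo service cloudera-scm-agent restart"
        else
            # -n keeps ssh from consuming the rest of the hosts file on stdin.
            ssh -n "$host" sudo service cloudera-scm-agent restart ||
                echo "restart failed on $host" >&2
        fi
    done < "$hosts_file"
}

# Example:
#   DRY_RUN=1 restart_agents cluster-hosts.txt   # preview the commands
#   restart_agents cluster-hosts.txt             # run them
```

Running with DRY_RUN=1 first gives a chance to review the host list before anything is restarted.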

Run the Upgrade Wizard

- Log in to the Cloudera Manager Admin console.

- From the Home page, click the drop-down menu next to the cluster name and select Upgrade Cluster. The Upgrade Wizard starts.

- In the Choose Method field, select the Use Packages option.

- In the Choose CDH Version (Packages) field, specify the CDH version of the packages you have installed on your cluster. Click Continue.

- Read the notices for steps you must complete before upgrading, click the Yes, I ... checkboxes after completing the steps, and click Continue.

- Cloudera Manager checks that hosts have the correct software installed. If the packages have not been installed, a warning displays to that effect. Install the packages and click Check Again. When there are no errors, click Continue.

- The Host Inspector runs and displays the CDH version on the hosts. Click Continue.

- Choose the type of upgrade and restart:

- Cloudera Manager upgrade - Cloudera Manager performs all service upgrades and restarts the cluster.

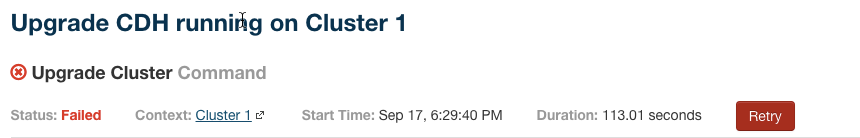

- Click Continue. The Command Progress screen displays the result of the commands run by the wizard as it shuts down all services, activates the new parcel, upgrades services as necessary, deploys client configuration files, and restarts services. If any of the steps fails or you click the Abort button, the Retry button at the top right is enabled. You can click Retry to retry the step and continue the wizard, or click the Cloudera Manager logo to return to the Home page and manually perform the failed step and all following steps.

- Click Continue. The wizard reports the result of the upgrade.

- Manual upgrade - Select the Let me upgrade the cluster checkbox. Cloudera Manager configures the cluster to the specified CDH version but performs no upgrades or service restarts. Manually doing the upgrade is difficult and is for advanced users only.

- Click Continue. Cloudera Manager displays links to documentation describing the required upgrade steps.

- Click Finish to return to the Home page.

Perform Manual Upgrade or Recover from Failed Steps

The actions performed by the upgrade wizard are listed in Upgrade Wizard Actions. If you chose manual upgrade or any of the steps in the Command Progress screen fails, complete the steps as described in that section before proceeding.

Finalize the HDFS Metadata Upgrade

- Go to the HDFS service.

- Click the Instances tab.

- Click the NameNode instance.

- Select the Finalize Metadata Upgrade action, then click Finalize Metadata Upgrade to confirm.

Upgrade Wizard Actions

Upgrade HDFS Metadata

The steps in this section apply to the following upgrades:

- CDH 5.0 or 5.1 to 5.2 or higher

- CDH 5.2 or 5.3 to 5.4 or higher

- Start the ZooKeeper service.

- Go to the HDFS service.

- Select the Upgrade HDFS Metadata action, then click Upgrade HDFS Metadata to confirm.

Upgrade the Hive Metastore Database

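The steps in this section apply to the following upgrades:

- CDH 5.0 or 5.1 to 5.2 or higher

- CDH 5.3 to 5.4 or higher

- Go to the Hive service.

- Select the Stop action, then click Stop to confirm.

- Select the Upgrade Hive Metastore Database Schema action, then click Upgrade Hive Metastore Database Schema to confirm.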

- If you have multiple instances of Hive, perform the upgrade on each metastore database.

Upgrade the Oozie ShareLib

- Go to the Oozie service.

- Select the Start action, then click Start to confirm.

- Select the Install Oozie ShareLib action, then click Install Oozie ShareLib to confirm.

Upgrade Sqoop

- Go to the Sqoop service.

- Select the Stop action, then click Stop to confirm.

- Select the Upgrade Sqoop action, then click Upgrade Sqoop to confirm.

Upgrade the Sentry Database

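The steps in this section apply to the following upgrades:

- CDH 5.1 to 5.2 or higher

- CDH 5.2 to 5.3 or higher

- CDH 5.4 to 5.5 or higher

- Go to the Sentry service.

- Select the Stop action, then click Stop to confirm.

- Select the Upgrade Sentry Database Tables action, then click Upgrade Sentry Database Tables to confirm.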

Upgrade Spark

- Go to the Spark service.

- Select the Stop action, then click Stop to confirm.

- Select the Install Spark JAR action, then click Install Spark JAR to confirm.

- Select the Create Spark History Log Dir action, then click Create Spark History Log Dir to confirm.

Start Cluster Services

- On the Home page, click the drop-down menu to the right of the cluster name and select Start.

- Click Start that appears in the next screen to confirm. The Command Details window shows the progress of starting services.

When All services successfully started appears, the task is complete and you can close the Command Details window.

Deploy Client Configuration Files

- On the Home page, click the drop-down menu to the right of the cluster name and select Deploy Client Configuration.

- Click the Deploy Client Configuration button in the confirmation pop-up that appears.

©2016 Cloudera, Inc. All rights reserved