Managing Solr

You can install the Solr service through the Cloudera Manager installation wizard, using either parcels or packages. See Installing Search.

You can elect to have the service created and started as part of the Installation wizard. If you elect not to create the service using the Installation wizard, you can use the Add Service wizard to perform the installation. The wizard will automatically configure and start the dependent services and the Solr service. See Adding a Service for instructions.

- CDH 4 - Cloudera Search Documentation for CDH 4

- CDH 5 - Cloudera Search Guide

The following sections describe how to configure other CDH components to work with the Solr service.

Configuring the Flume Morphline Solr Sink for Use with the Solr Service

Minimum Required Role: Configurator (also provided by Cluster Administrator, Full Administrator)

To use a Flume Morphline Solr sink, the Flume service must be running on your cluster. See the Flume Near Real-Time Indexing Reference (CDH 4) or Flume Near Real-Time Indexing Reference (CDH 5) for information about the Flume Morphline Solr Sink and Managing Flume.

- Go to the Flume service.

- Click the Configuration tab.

- Select

- Select .

- Edit the following settings, which are templates that you must modify for your deployment:

- Morphlines File (morphlines.conf) - Configures Morphlines for Flume agents. You must use $ZK_HOST in this field instead of specifying a ZooKeeper quorum. Cloudera Manager automatically replaces the $ZK_HOST variable with the correct value during the Flume configuration deployment.

- Custom MIME-types File (custom-mimetypes.xml) - Configuration for the detectMimeTypes command. See the Cloudera Morphlines Reference Guide for details on this command.

- Grok Dictionary File (grok-dictionary.conf) - Configuration for the grok command. See the Cloudera Morphlines Reference Guide for details on this command.

If more than one role group applies to this configuration, edit the value for the appropriate role group. See Modifying Configuration Properties Using Cloudera Manager.

Once configuration is complete, Cloudera Manager automatically deploys the required files to the Flume agent process directory when it starts the Flume agent. Therefore, you can reference the files in the Flume agent configuration using their relative path names. For example, you can use the name morphlines.conf to refer to the location of the Morphlines configuration file.
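To make the $ZK_HOST placeholder concrete, the following Python sketch mimics the substitution on a morphlines.conf template. Cloudera Manager performs this step itself during deployment, so you never run anything like this; the template fragment, the collection name, and the quorum string are hypothetical values chosen only for illustration.

```python
# Illustration only: Cloudera Manager performs this substitution itself.
# The template fragment and the quorum string below are hypothetical.
MORPHLINES_TEMPLATE = """\
SOLR_LOCATOR : {
  collection : collection1
  zkHost : "$ZK_HOST"
}
"""


def render_morphlines(template: str, zk_quorum: str) -> str:
    """Replace every $ZK_HOST placeholder with the given ZooKeeper quorum."""
    return template.replace("$ZK_HOST", zk_quorum)


rendered = render_morphlines(MORPHLINES_TEMPLATE, "zk1.example.com:2181/solr")
print(rendered)
```

The point of the sketch is that the template stays portable: the same morphlines.conf text works on any cluster because the quorum is filled in at deployment time.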

Deploying Solr with Hue

Minimum Required Role: Configurator (also provided by Cluster Administrator, Full Administrator)

- Go to the Hue service.

- Click the Configuration tab.

- Type the word "snippet" in the Search box.

A set of Hue advanced configuration snippet properties displays.

- Add information about your Solr host to the Hue Server Configuration Advanced Configuration Snippet for hue_safety_valve_server.ini property. For example, if your hostname is SOLR_HOST, you might add the following:

[search]
## URL of the Solr Server
solr_url=http://SOLR_HOST:8983/solr

- Click Save Changes to save your advanced configuration snippet changes.

- Restart the Hue Service.
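If you maintain the snippet for several environments, a small helper can generate it and check that it is well formed. The sketch below is an assumption-laden illustration: the function name is hypothetical, the default port 8983 is taken from the example above, and Python's configparser is used only as a quick sanity check (Hue's own configuration parser may behave differently).

```python
import configparser


def hue_search_snippet(solr_host: str, port: int = 8983) -> str:
    """Return the [search] section text for hue_safety_valve_server.ini."""
    return (
        "[search]\n"
        "## URL of the Solr Server\n"
        f"solr_url=http://{solr_host}:{port}/solr\n"
    )


snippet = hue_search_snippet("SOLR_HOST")
print(snippet)

# Sanity check: the snippet parses as INI and exposes the expected key.
parser = configparser.ConfigParser()
parser.read_string(snippet)
print(parser["search"]["solr_url"])
```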

Important: If you are using parcels with CDH 4.3, you must register the "hue-search" application manually or access will fail. You do not need to do this if you are using CDH 4.4 and higher.

- Stop the Hue service.

- From the command line do the following:

- cd /opt/cloudera/parcels/CDH-4.3.0-1.cdh4.3.0.pXXX/share/hue

(Substitute your own local repository path for /opt/cloudera/parcels/... if yours is different, and specify the appropriate name of the CDH 4.3 parcel that exists in your repository.)

- ./build/env/bin/python ./tools/app_reg/app_reg.py --install /opt/cloudera/parcels/SOLR-0.9.0-1.cdh4.3.0.pXXX/share/hue/apps/search

- sed -i 's/\.\/apps/..\/..\/..\/..\/..\/apps/g' ./build/env/lib/python2.X/site-packages/hue.pth

where python2.X should be the version you are using (for example, python2.4).

- Start the Hue service.
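The sed expression in the registration steps is dense because every slash is escaped. As a reading aid, the following Python sketch applies the same substitution to a single line: each occurrence of ./apps becomes ../../../../../apps. The sample hue.pth entry is hypothetical and serves only to show the effect; it is not taken from a real installation.

```python
import re


def rewrite_pth_line(line: str) -> str:
    # Same substitution as the sed command: replace each "./apps"
    # with "../../../../../apps" (the dot is matched literally).
    return re.sub(r"\./apps", "../../../../../apps", line)


# Hypothetical hue.pth entry, used only to show the effect.
before = "./apps/search/src"
after = rewrite_pth_line(before)
print(before, "->", after)
```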

Using a Load Balancer with Solr

- Go to the Solr service.

- Click the Configuration tab.

- Select

- Select

- Enter the hostname and port number of the load balancer in the Solr Load Balancer property in the format hostname:port number.

Note: When you set this property, Cloudera Manager regenerates the keytabs for Solr roles. The principal in these keytabs contains the load balancer hostname.

If there is a Hue service that depends on this Solr service, it also uses the load balancer to communicate with Solr.

- Click Save Changes to commit the changes.

Migrating Solr Replicas

When you replace a host, migrating replicas on that host to the new host, instead of depending on failure recovery, can help ensure optimal performance.

- For adding replicas, the node parameter ensures the new replica is created on the intended host. If no host is specified, Solr selects a host with relatively fewer replicas.

- For deleting replicas, the request is routed to the host that hosts the replica to be deleted.

Adding replicas can be resource intensive. For best results, add replicas when the system is not under heavy load. For example, do not add additional replicas when heavy indexing is occurring or when MapReduceIndexerTool jobs are running.

Cloudera recommends using API calls to create and unload cores. Do not use the Cloudera Manager Admin Console or the Solr Admin UI for these tasks.

The procedure below uses the following example names:

- Host names:

- origin at the IP address 192.168.1.81:8983_solr.

- destination at the IP address 192.168.1.82:8983_solr.

- Collection name: email

- Replicas:

- The original replica email_shard1_replica1, which is on origin.

- The new replica email_shard1_replica2, which will be on destination.

To migrate a replica to a new host

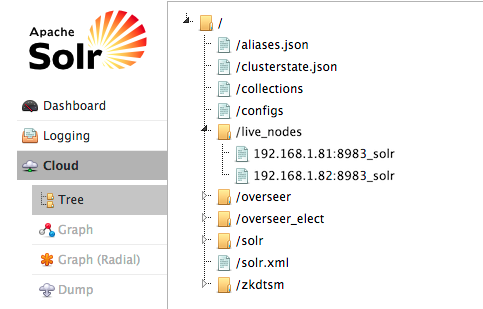

- (Optional) If you want to add a replica to a particular node, review the contents of the live_nodes directory on ZooKeeper to find all nodes available to host replicas. Open the Solr Administration User Interface, click Cloud, click Tree, and expand live_nodes. The Solr Administration User Interface, including live_nodes, might appear as follows:

Note: Information about Solr nodes can also be found in clusterstate.json, but that file only lists nodes currently hosting replicas. Nodes running Solr but not currently hosting replicas are not listed in clusterstate.json.

- Add the new replica on the destination server using the ADDREPLICA API.

http://192.168.1.81:8983/solr/admin/collections?action=ADDREPLICA&collection=email&shard=shard1&node=192.168.1.82:8983_solr

- Verify that the replica creation succeeds and moves from recovery state to ACTIVE. You can check the replica status in the Cloud view, which can be found at a URL similar to: http://192.168.1.82:8983/solr/#/~cloud.

Note: Do not delete the original replica until the new one is in the ACTIVE state. When the newly added replica is listed as ACTIVE, the index has been fully replicated to the newly added replica. The total time to replicate an index varies according to factors such as network bandwidth and the size of the index. Replication times on the scale of hours are not uncommon and do not necessarily indicate a problem.

You can use the details command to get an XML document that contains information about replication progress. Use curl or a browser to access a URI similar to:

http://192.168.1.82:8983/solr/email_shard1_replica2/replication?command=details

Accessing this URI returns an XML document that contains content about replication progress. A snippet of the XML content might appear as follows:

...
<str name="numFilesDownloaded">126</str>
<str name="replicationStartTime">Tue Jan 21 14:34:43 PST 2014</str>
<str name="timeElapsed">457s</str>
<str name="currentFile">4xt_Lucene41_0.pos</str>
<str name="currentFileSize">975.17 MB</str>
<str name="currentFileSizeDownloaded">545 MB</str>
<str name="currentFileSizePercent">55.0</str>
<str name="bytesDownloaded">8.16 GB</str>
<str name="totalPercent">73.0</str>
<str name="timeRemaining">166s</str>
<str name="downloadSpeed">18.29 MB</str>
...
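If you poll the details command repeatedly, it can help to pull out just the progress fields. The following Python sketch parses a saved response with the standard library. The sample text reuses field names and values from the snippet above, but the enclosing lst element is an assumption about the surrounding document structure, and fetching the document over HTTP is left out.

```python
import xml.etree.ElementTree as ET

# Sample assembled from fields shown in the snippet above. The enclosing
# <lst> wrapper is an assumption about the surrounding document structure.
SAMPLE = """\
<lst name="slave">
  <str name="numFilesDownloaded">126</str>
  <str name="timeElapsed">457s</str>
  <str name="bytesDownloaded">8.16 GB</str>
  <str name="totalPercent">73.0</str>
  <str name="timeRemaining">166s</str>
</lst>
"""


def replication_progress(xml_text: str) -> dict:
    """Collect every <str name="..."> element into a name -> text mapping."""
    root = ET.fromstring(xml_text)
    return {el.get("name"): el.text for el in root.iter("str") if el.get("name")}


progress = replication_progress(SAMPLE)
print(f"{progress['totalPercent']}% done, {progress['timeRemaining']} remaining")
```

Because the function walks every str element regardless of depth, it should also cope with a full response in which these fields are nested more deeply, although that has not been verified against a live server here.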

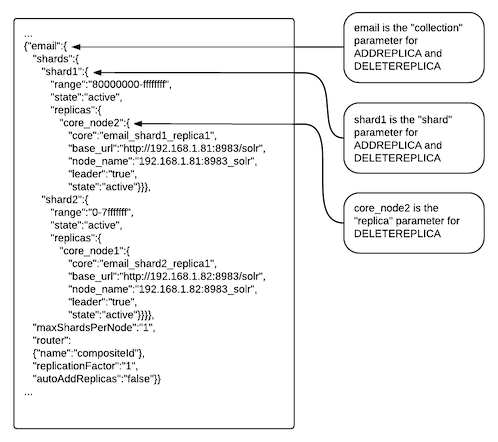

- Use the CLUSTERSTATUS API to retrieve information about the cluster, including current cluster status:

http://192.168.1.81:8983/solr/admin/collections?action=clusterstatus&wt=json&indent=true

Review the returned information to find the correct replica to remove. An example of the JSON file might appear as follows:

- Delete the old replica on origin server using the DELETEREPLICA API:

http://192.168.1.81:8983/solr/admin/collections?action=DELETEREPLICA&collection=email&shard=shard1&replica=core_node2

The DELETEREPLICA call removes the datadir.
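The migration steps above can be sketched in Python as URL construction plus a check of the cluster status. This is a minimal illustration, not a supported tool: the function names are hypothetical, the status dictionary is a hand-written, heavily trimmed stand-in for a CLUSTERSTATUS response (a real response contains more fields), and the sketch only builds the URLs rather than sending requests.

```python
from urllib.parse import urlencode

BASE = "http://192.168.1.81:8983/solr"
ORIGIN = "192.168.1.81:8983_solr"
DESTINATION = "192.168.1.82:8983_solr"


def collections_api_url(base_url, action, **params):
    """Build a Collections API URL; ':' is kept literal to match the examples."""
    query = urlencode({"action": action, **params}, safe=":")
    return f"{base_url}/admin/collections?{query}"


def replicas_on_node(cluster_status, collection, shard, node, state=None):
    """Return replica names on a node, optionally filtered by replica state."""
    replicas = cluster_status["cluster"]["collections"][collection]["shards"][shard]["replicas"]
    return [
        name
        for name, info in replicas.items()
        if info["node_name"] == node and (state is None or info["state"] == state)
    ]


# Hypothetical, trimmed stand-in for a CLUSTERSTATUS response.
SAMPLE_STATUS = {
    "cluster": {"collections": {"email": {"shards": {"shard1": {"replicas": {
        "core_node2": {"core": "email_shard1_replica1", "node_name": ORIGIN, "state": "active"},
        "core_node3": {"core": "email_shard1_replica2", "node_name": DESTINATION, "state": "active"},
    }}}}}}
}

add_url = collections_api_url(
    BASE, "ADDREPLICA", collection="email", shard="shard1", node=DESTINATION
)
print(add_url)

# Only build the delete call once the new replica on destination is active.
new_active = replicas_on_node(SAMPLE_STATUS, "email", "shard1", DESTINATION, state="active")
old = replicas_on_node(SAMPLE_STATUS, "email", "shard1", ORIGIN)
if new_active and old:
    delete_url = collections_api_url(
        BASE, "DELETEREPLICA", collection="email", shard="shard1", replica=old[0]
    )
    print(delete_url)
```

Guarding the delete behind the state check mirrors the advice not to remove the original replica until the new one is listed as ACTIVE.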

©2016 Cloudera, Inc. All rights reserved